A Researcher's Guide to Transcription in Qualitative Research

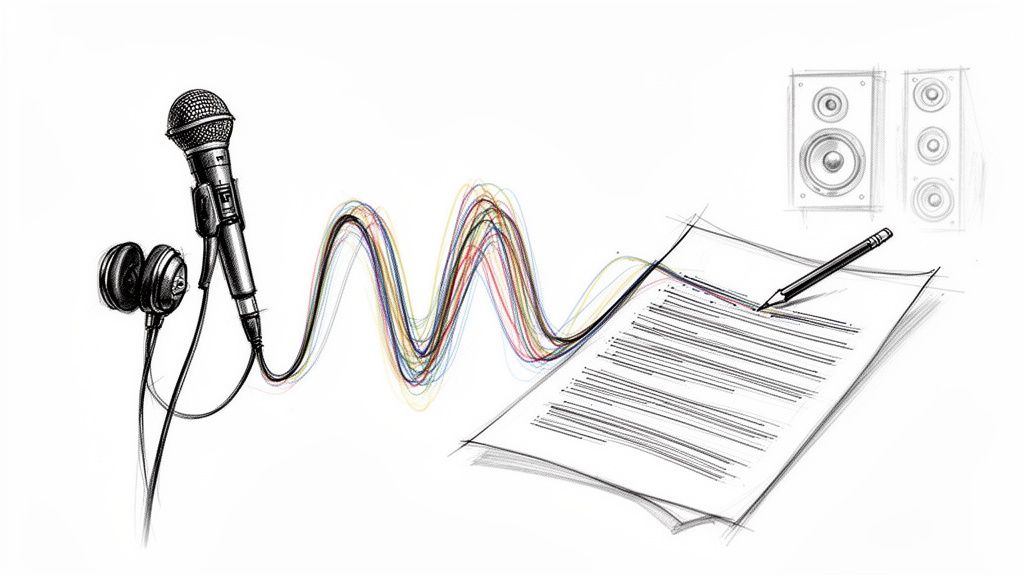

At its heart, transcription is the simple act of turning spoken words from your interviews, focus groups, and field recordings into written text. But in qualitative research, it’s so much more than just typing what you hear. From my experience, it’s the very first, and arguably most critical, step in making sense of your data. This process transforms fleeting audio into a solid, searchable, and analyzable dataset, laying the groundwork for every insight you’ll uncover.

Why Transcription Is the Bedrock of Qualitative Research

Think about it this way: trying to analyze hours of audio recordings without a transcript is like trying to navigate a new city without a map. You might remember a few key landmarks, but you'll miss the subtle details, the side streets, and the overall structure of the place. You're relying entirely on memory, constantly rewinding and re-listening, hoping to catch that one crucial phrase.

The process of transcription in qualitative research is what creates that map. It takes the abstract flow of conversation and turns it into concrete, tangible data that you can systematically explore, code, and revisit time and again.

This simple conversion is what truly unlocks your data's potential. It lets you move past just hearing what was said and allows you to dig in, analyze deeply, and build your findings on a solid, evidence-based foundation.

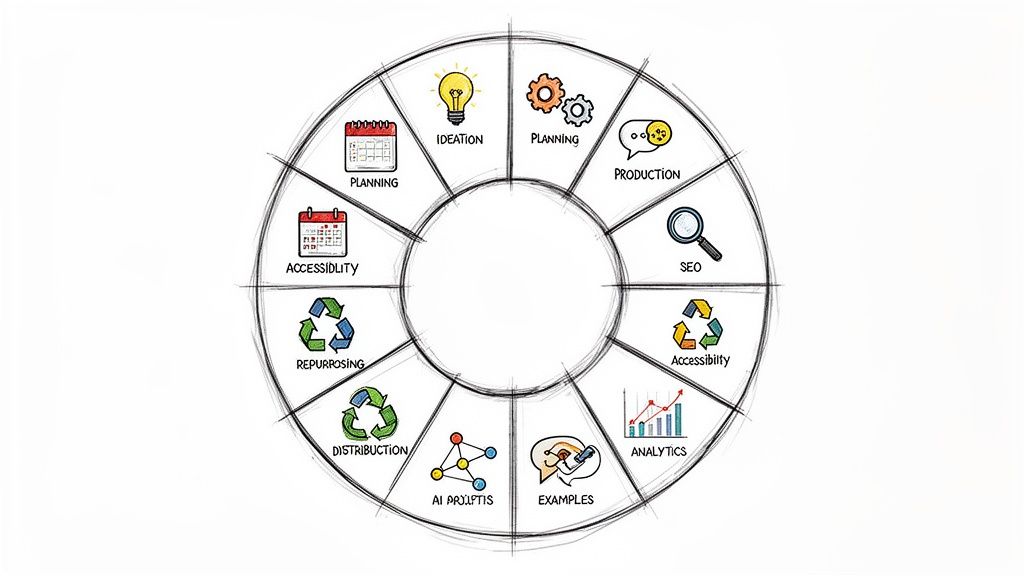

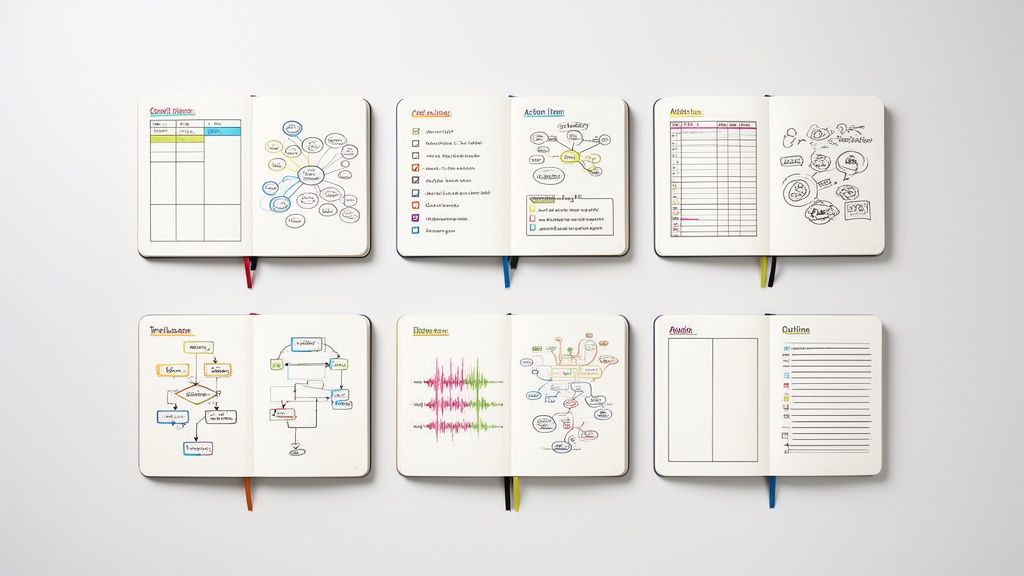

The Role of Transcription at a Glance

To put it simply, transcription is the bridge between collecting your data and analyzing it. The table below breaks down its essential functions and how they directly benefit your research.

Ultimately, a good transcript allows you to work with your data far more efficiently and rigorously than you ever could with audio alone.

Turning Spoken Words into Analyzable Data

The main job of a transcript is to make spoken language ready for deep analysis. You can listen to an interview for an hour, but reading that same conversation gives you a completely different level of insight and control.

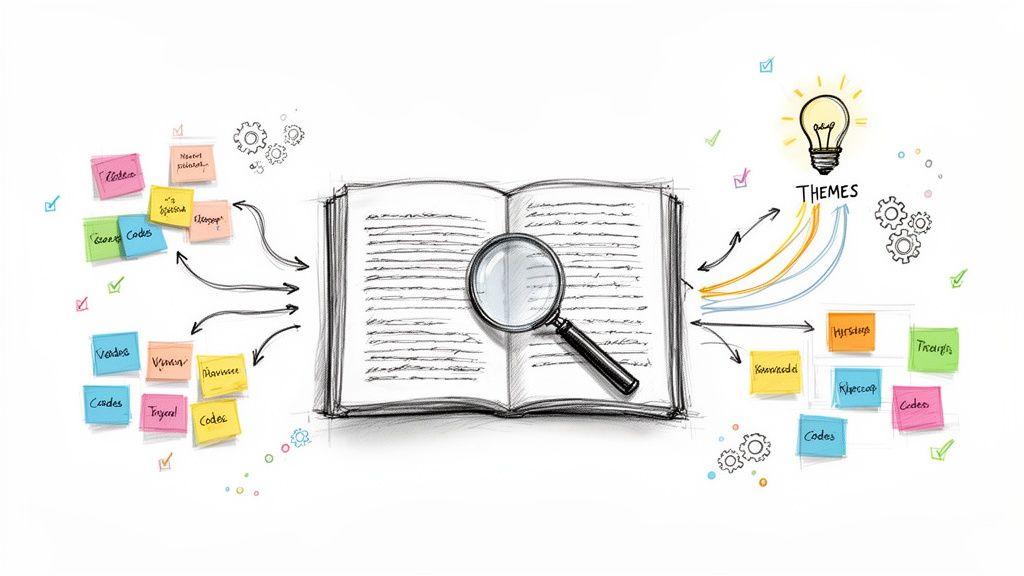

A written transcript gives you the power to:

- Spot Themes and Patterns: You can easily highlight words, phrases, and ideas that pop up again and again across different interviews—something that’s nearly impossible to do accurately by just listening.

- Make Coding Possible: Applying codes or labels directly onto the text is the cornerstone of most qualitative analysis. You can learn more about these methods in our detailed guide to qualitative research analysis methods.

- Search for Keywords: Need to find every time a participant mentioned "budget constraints"? A simple text search will take you there in seconds, saving you hours of scrubbing through audio files.
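To make the keyword search concrete, here is a minimal Python sketch. It assumes your transcripts are already loaded as plain text; the file names, sample lines, and the `find_keyword` helper are hypothetical and only there for illustration.

```python
import re


def find_keyword(transcripts: dict[str, str], phrase: str) -> list[tuple[str, int, str]]:
    """Return (transcript name, line number, line text) for every line containing the phrase."""
    # Escape the phrase so it is matched literally, ignoring case.
    pattern = re.compile(re.escape(phrase), re.IGNORECASE)
    hits = []
    for name, text in transcripts.items():
        for line_no, line in enumerate(text.splitlines(), start=1):
            if pattern.search(line):
                hits.append((name, line_no, line.strip()))
    return hits


# Hypothetical sample data standing in for real transcript files.
sample = {
    "interview_01.txt": "P1: We had budget constraints all year.\nI: How did that feel?",
    "interview_02.txt": "P2: Honestly, Budget Constraints shaped every decision.",
}

for name, line_no, line in find_keyword(sample, "budget constraints"):
    print(f"{name}:{line_no}: {line}")
```

Most analysis tools have a search like this built in, so treat the sketch as a picture of what is happening under the hood rather than something you need to write yourself.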

Ensuring Accuracy and Verifiability

A transcript is more than just a tool for analysis; it's a permanent, verifiable record of your research. It's the objective evidence you can point to, strengthening the credibility and rigor of your entire project.

A detailed transcript anchors your findings in the participants' own words, providing the direct evidence needed to support your interpretations and conclusions. This makes your research transparent and defensible during peer review.

This process is so fundamental to modern research that it’s created a massive industry. The global transcription services market was valued at an incredible USD 31.9 billion in 2020 and is on a steady growth path. This figure alone shows just how essential transcription has become for turning raw conversations into valuable knowledge in both academic and business settings.

Choosing the Right Transcription Style for Your Analysis

Once you have your audio recordings, you’ve reached a critical fork in the road: how, exactly, are you going to turn that spoken audio into written text? The style of transcription in qualitative research isn't a minor detail; it fundamentally shapes the data you’ll be working with and, ultimately, the insights you can pull from it.

Think of it like choosing the right lens for a camera. Are you trying to capture a raw, unedited candid shot with every imperfection intact? Or do you need a professionally retouched portrait that’s clean and focused? Maybe you need a glossy, polished image ready for a magazine cover. Each serves a purpose, and your research goals will tell you which one to pick.

Let’s walk through the three main transcription styles so you can make the right call for your project.

Verbatim Transcription: The Raw, Unfiltered Data

Verbatim transcription is the most literal and exhaustive style you can choose. It’s a painstaking process of capturing every single sound uttered by your participants, creating a complete and unfiltered record of the conversation.

This meticulous approach includes:

- Filler words: Every "um," "ah," "like," and "you know" is typed out.

- False starts and stutters: If someone starts a sentence, stops, and then rephrases it, both attempts are recorded.

- Pauses and interruptions: Meaningful silences, people talking over each other, and other interruptions are all noted.

- Non-verbal cues: Sounds that add context, like [laughter], [sighs], or [coughs], are typically included in brackets.

You'll want to use this style when how something is said is just as important as what is said. For instance, in a study about anxiety, a participant's hesitation—marked by lots of filler words and pauses—could be a crucial piece of data. This makes it the gold standard for discourse analysis, conversation analysis, and any research focused on the subtle nuances of speech patterns.

Intelligent Verbatim: The Researcher's Go-To

Intelligent verbatim (also known as clean verbatim) strikes a practical balance between complete accuracy and sheer readability. It faithfully captures what was said but cleans up the text to make it much easier to analyze. For most qualitative research projects I've worked on, this is the default and most practical choice.

The whole point here is to cut out the "noise" that doesn’t add real analytical value, letting you get to the heart of the matter faster.

An intelligent verbatim transcript is a lightly edited version of the original audio. It omits all the filler words, stutters, and repetitions that can clutter the text, allowing the researcher to focus directly on the core meaning and content of the participant's statements.

For example, a spoken sentence like, "Well, I, uh, I guess I thought... you know, maybe the project would, like, be finished sooner," simply becomes: "I guess I thought maybe the project would be finished sooner." The meaning is preserved, including the speaker's hedging, but the text is far cleaner and easier to code. This style is perfect for thematic analysis, content analysis, and narrative inquiry, where the ideas and experiences being shared are the main event.
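If you start from a full verbatim transcript, part of this cleanup can be roughed out in code. The sketch below is a simplified illustration, not a standard algorithm: the filler list and the `clean_verbatim` helper are assumptions, and it deliberately leaves ambiguous words such as "like" or "well" alone because they are often meaningful.

```python
import re

# Assumed filler list; extend or shrink it to match your own transcription key.
FILLER = re.compile(r",?\s*\b(?:you know|um|uh|ah)\b,?", re.IGNORECASE)
# A word immediately repeated one or more times, as in "I I guess".
REPEAT = re.compile(r"\b(\w+)(?:\s+\1\b)+", re.IGNORECASE)


def clean_verbatim(text: str) -> str:
    """Strip common fillers and immediate word repetitions from one utterance."""
    text = FILLER.sub("", text)      # drop fillers plus the commas around them
    text = REPEAT.sub(r"\1", text)   # collapse "I I" into "I"
    text = re.sub(r"\s+", " ", text)  # tidy leftover whitespace
    return text.strip()


print(clean_verbatim("I, uh, I think the, um, budget was, you know, tight."))
```

A rule-based pass like this will also collapse legitimate repetition ("very very") and remove a genuine "you know" ("Do you know him?"), so it is best treated as a first pass that you then read against the audio.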

Edited Transcription: The Polished Final Product

Edited transcription takes the cleanup to the next level. This style doesn’t just remove the ums and ahs; it also corrects grammatical errors, smooths out awkward phrasing, and polishes the text so it’s ready for a formal audience. The end goal is a highly readable and professional document.

When would you use this? It’s all about presentation. This is your best bet when the transcript itself is meant to be shared or published.

Think about using it for:

- Quoting a participant directly in your final report or publication.

- Creating public-facing content like website articles or case studies.

- Sharing interview highlights with stakeholders who need a quick, clear summary without the messiness of raw speech.

Here, the priority shifts from word-for-word accuracy to clarity and professionalism. While it’s not the right tool for deep linguistic analysis, it’s brilliant for making your qualitative data presentable and easy for any reader to understand.

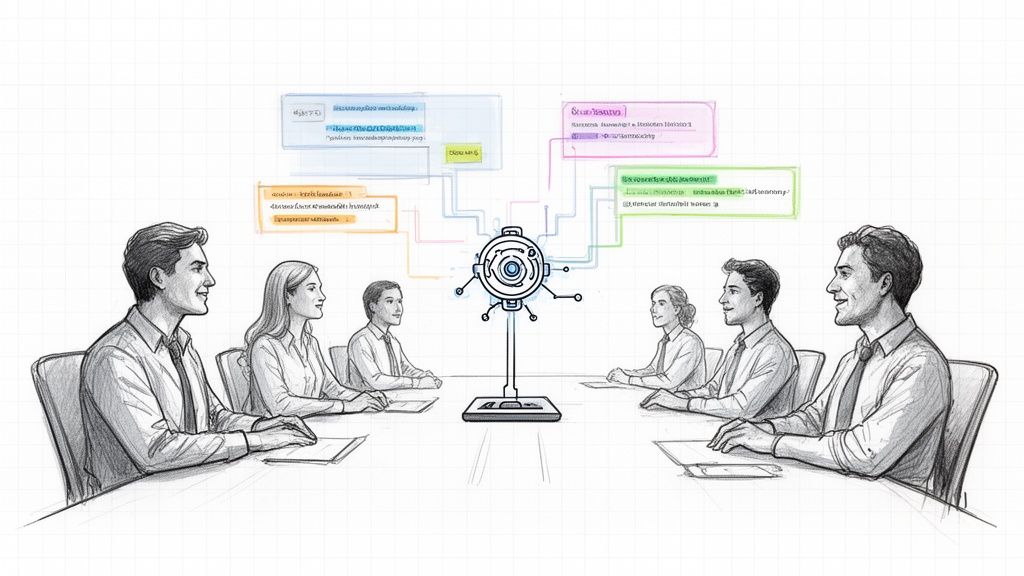

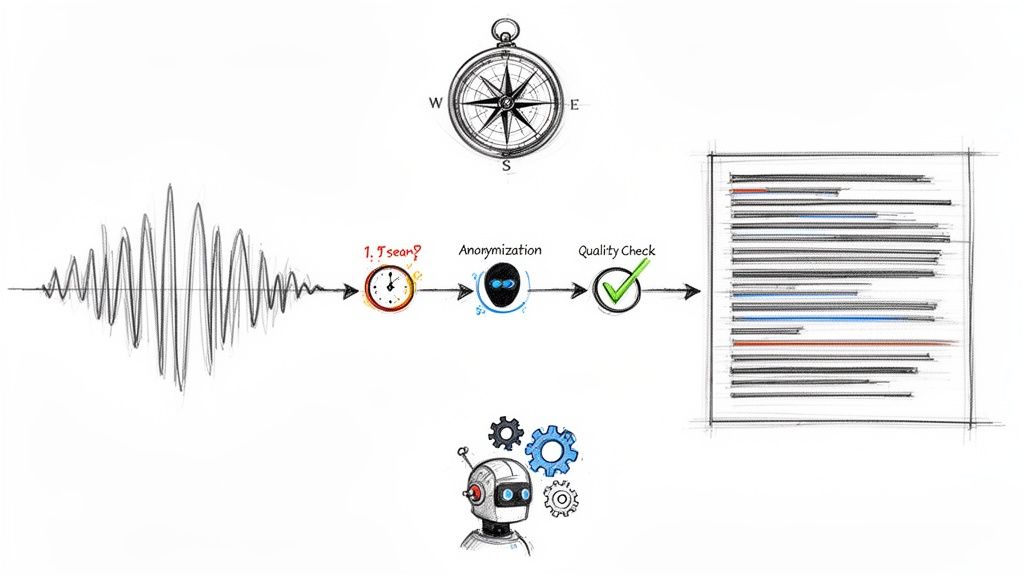

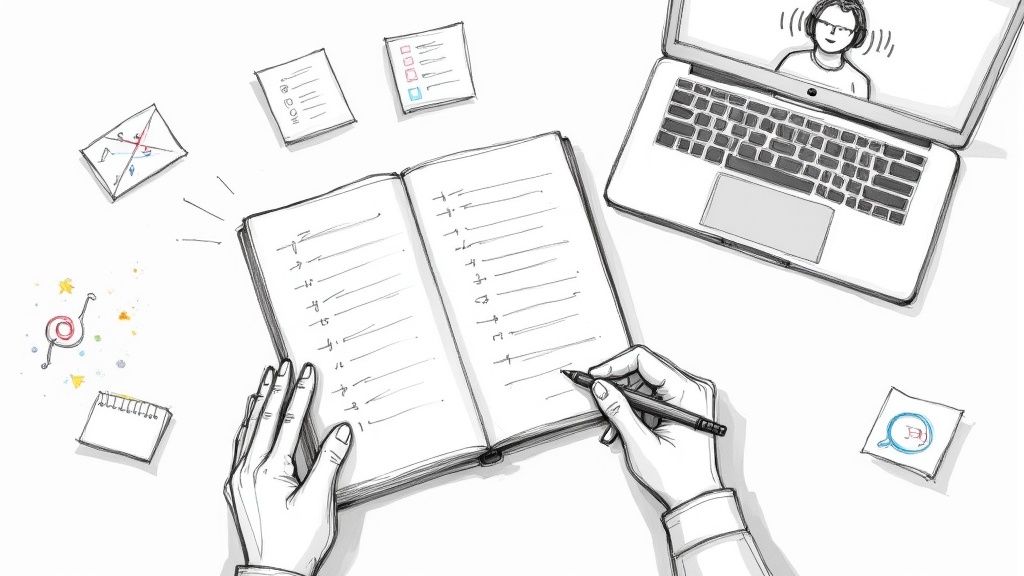

Comparing Manual and AI-Assisted Transcription Workflows

Getting from a raw audio recording to a clean, usable transcript used to be a long and painful journey. For decades, researchers really only had one choice: the grueling cycle of listening, typing, rewinding, and listening again, for hours on end. But today, technology has given us a completely different way to work—one that changes how we interact with our data from the very start.

This new approach isn't about letting a robot take over. It’s about giving the researcher superpowers. By pairing the incredible speed of artificial intelligence with the sharp, nuanced eye of a human expert, the AI-assisted (or hybrid) workflow turns transcription from a dreaded chore into an active, engaging part of the analysis itself.

The Old Way: Manual Transcription

Think of a medieval scribe painstakingly copying a manuscript by hand. That’s not too far off from traditional manual transcription. The researcher is stuck in the role of a typist, pouring massive amounts of time and mental energy into the purely mechanical task of turning spoken words into text.

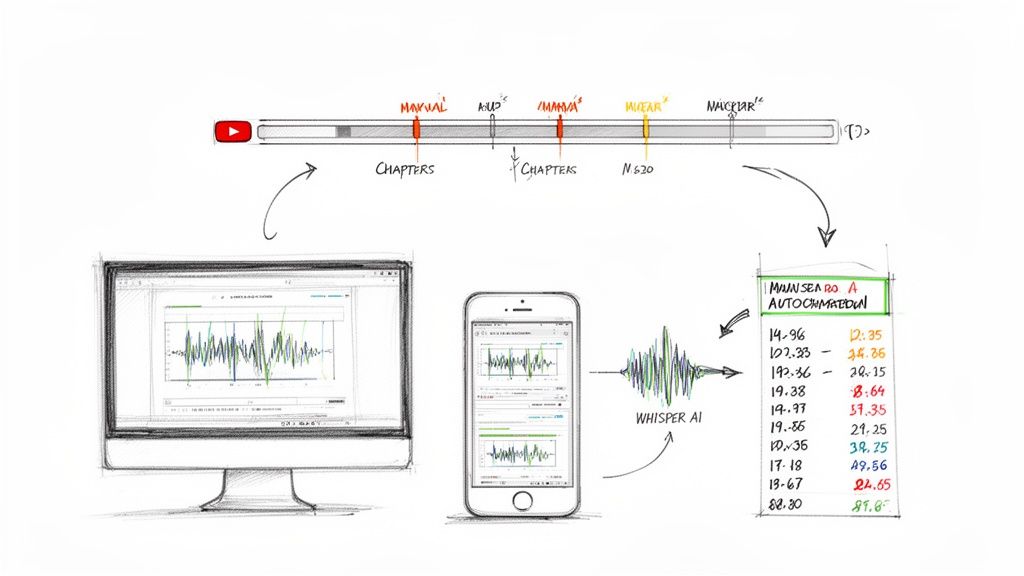

This process is a notorious time sink. It’s not uncommon for a one-hour interview to take 6 to 10 hours to transcribe manually. Some studies even found that researchers can spend up to 40% of their entire project timeline just on transcription. The arrival of powerful AI models like OpenAI's Whisper, first released on September 21, 2022, has changed the game by producing first drafts in minutes across 97 languages and accents.

While a skilled human can achieve near-perfect accuracy, the biggest downsides of the old way are the huge time commitment and the serious risk of burnout. It pulls you away from where your focus should be: making sense of the data.

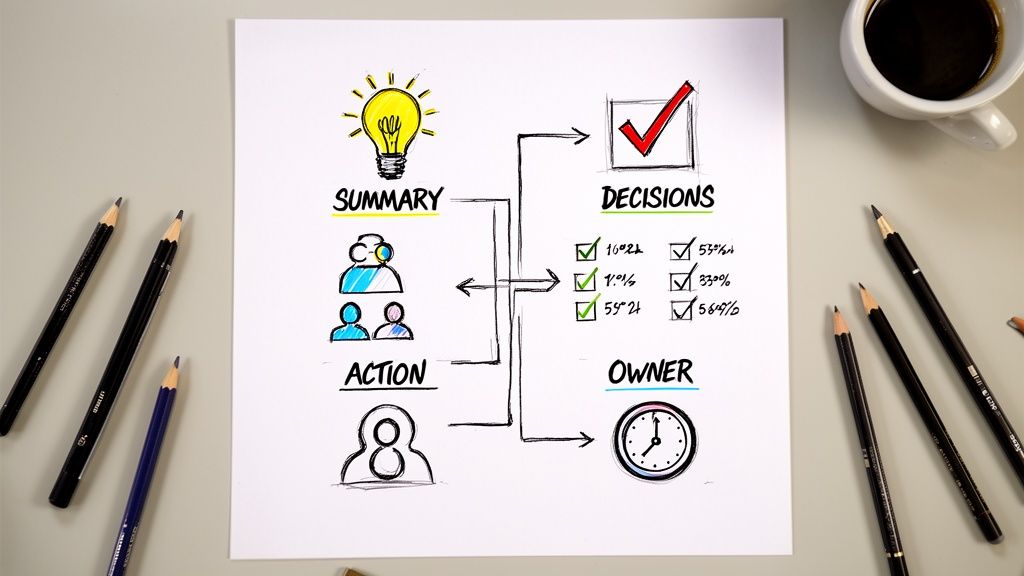

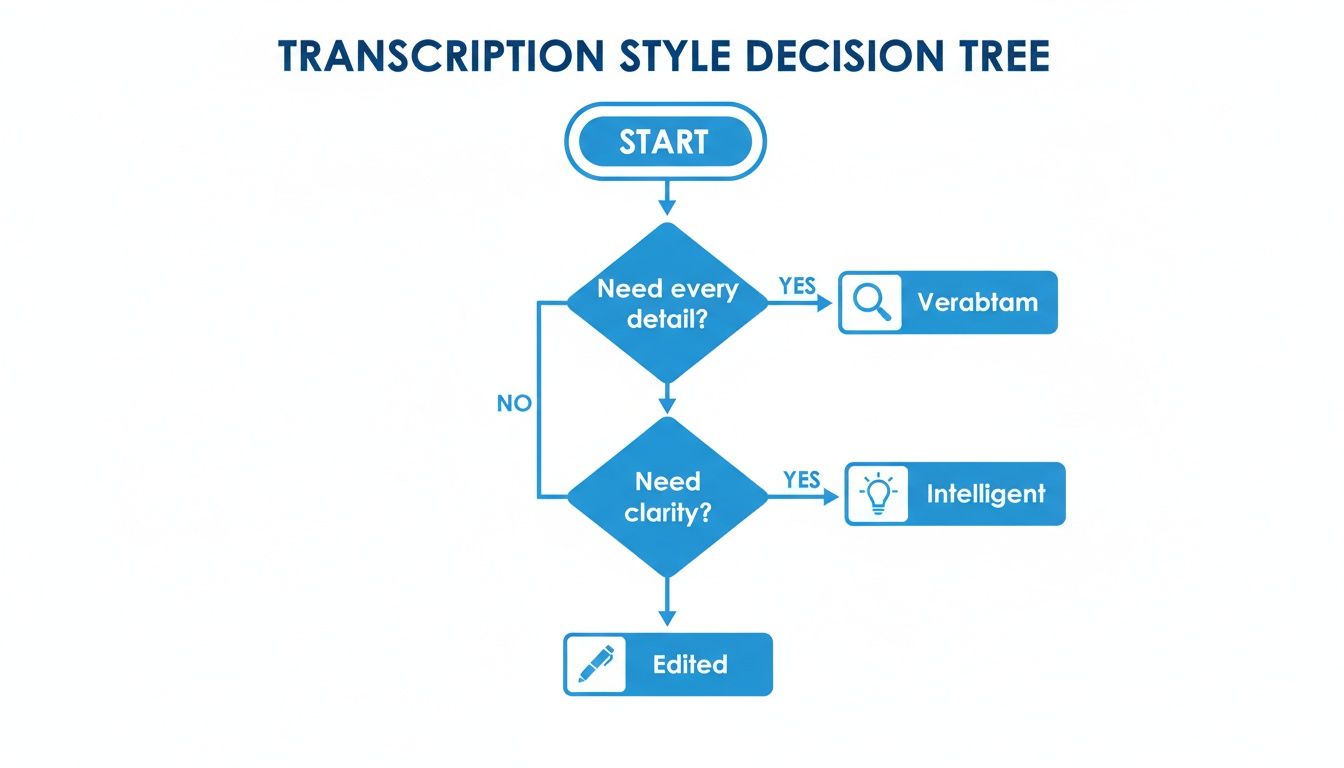

This decision tree can help you figure out which transcription style fits your project’s goals.

The main takeaway here is that your analytical needs should drive your choice. Whether you need to capture every single "um" and "ah" or you just need a clean, readable text will point you to the right method.

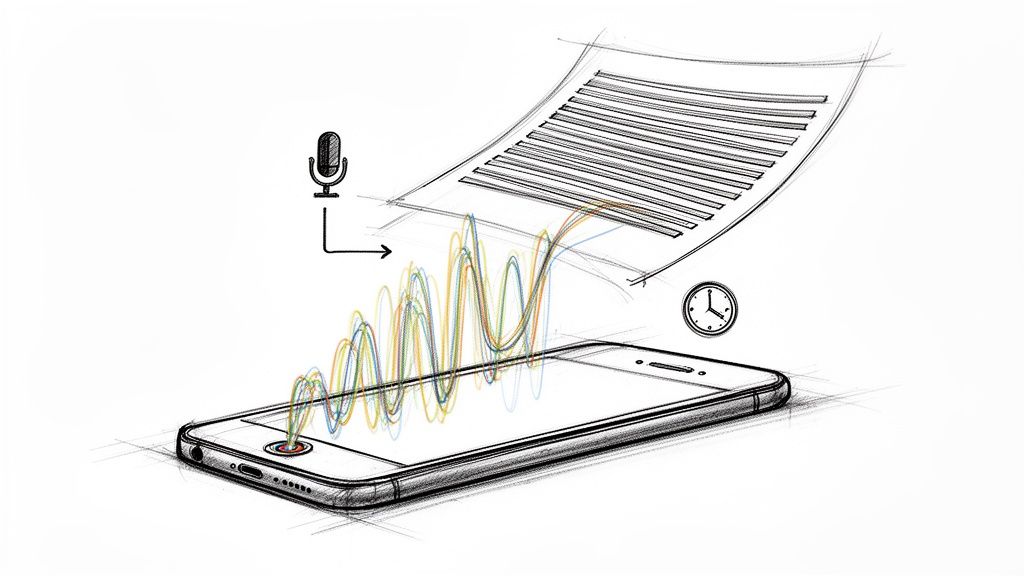

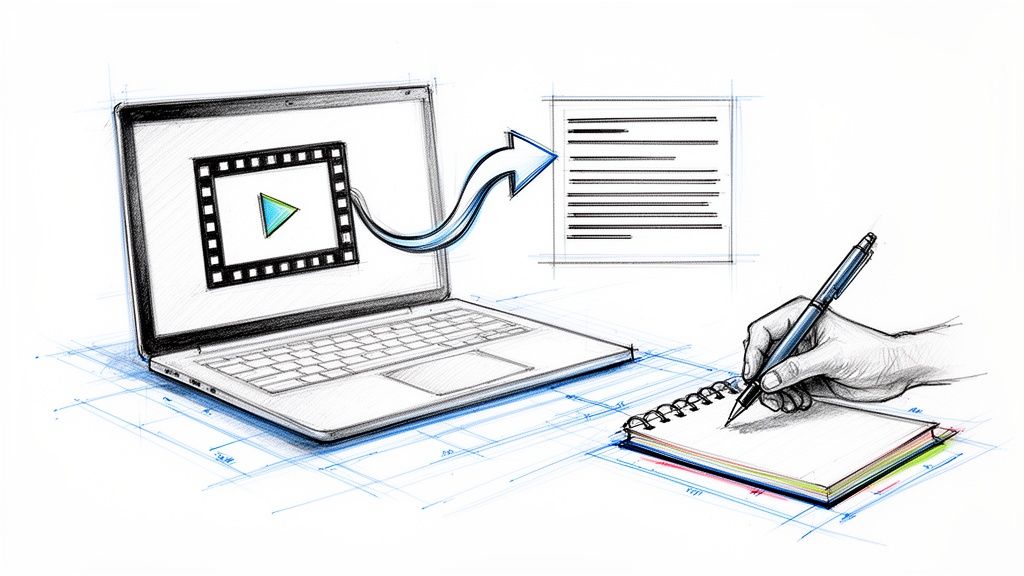

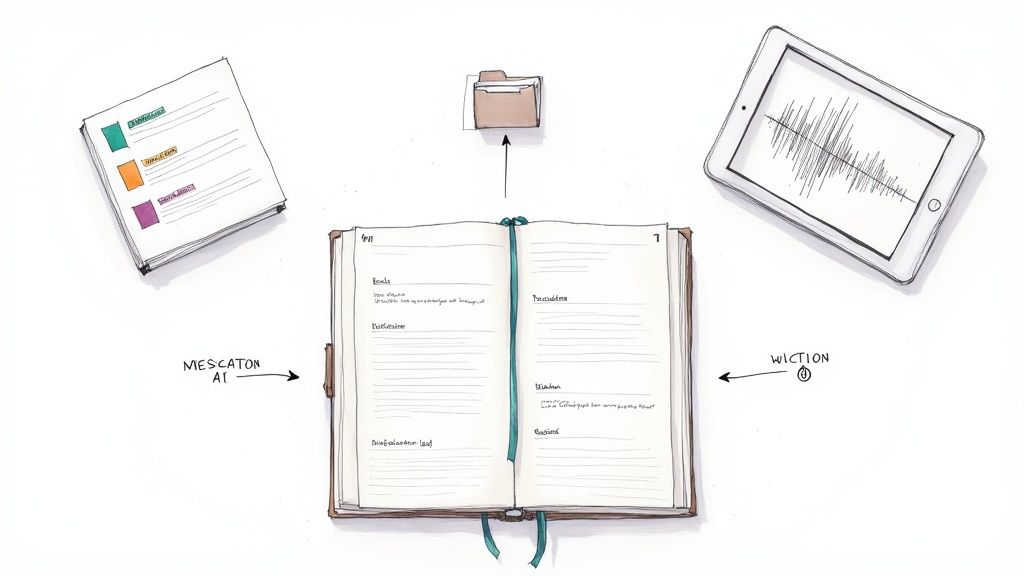

The New Way: AI-Assisted Transcription

The AI-assisted model completely flips the script. Instead of facing a blank document, you start with a nearly finished transcript generated by an AI in just a few minutes. This fundamentally shifts your role from typist to editor and analyst.

The hybrid workflow doesn't eliminate the researcher; it elevates them. The focus shifts from the mechanical act of typing to the intellectual work of verifying, refining, and engaging with the text from the very first pass.

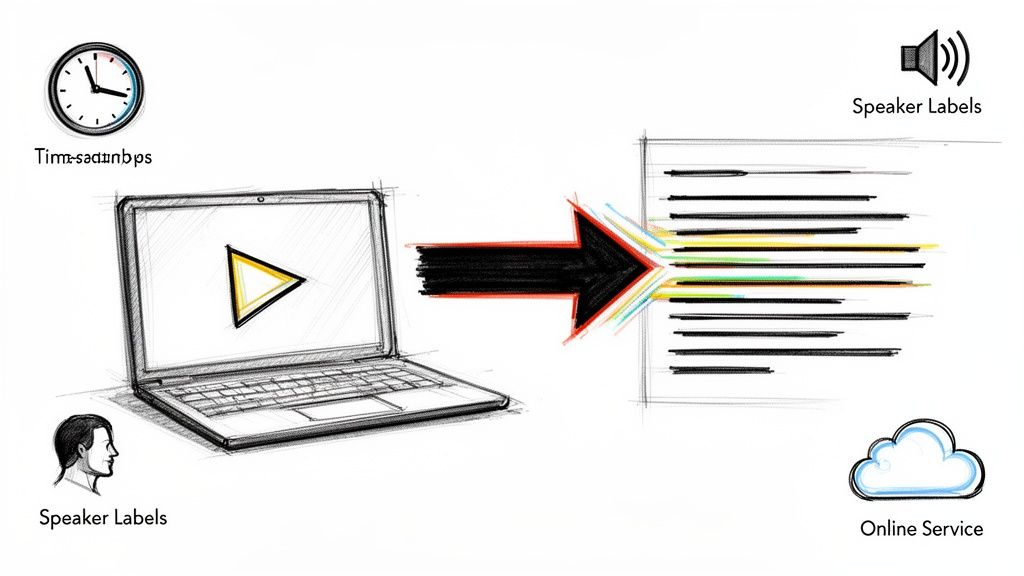

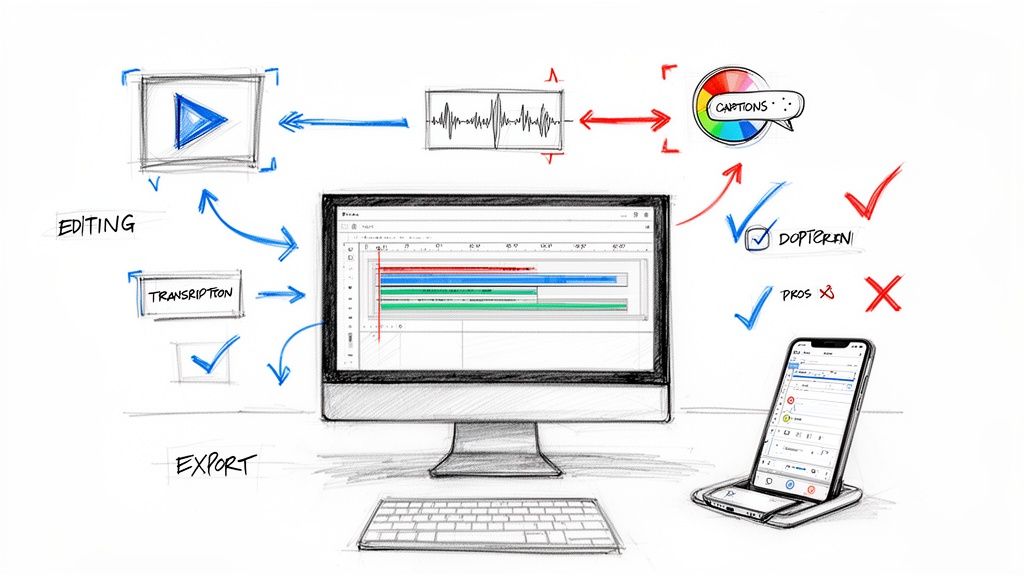

The process is refreshingly simple:

- Upload Audio: Just drop your audio or video file into an AI transcription service.

- AI Generates Draft: The AI churns through the file and kicks out a full transcript, often with timestamps and initial speaker labels already in place.

- Researcher Reviews and Refines: Now, you listen along while reading the draft, catching any mistakes in jargon, correcting speaker names, or clarifying ambiguous phrases.
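For readers who like to see the moving parts, the three steps above can be sketched in Python. This assumes the open-source `openai-whisper` package, whose `transcribe()` result contains a `segments` list with `start` and `text` fields; hosted services will expose their own interfaces. The `segments_to_draft` helper and the `SPEAKER?` placeholder are illustrative assumptions, since the open-source model does not label speakers on its own.

```python
def format_timestamp(seconds: float) -> str:
    """Render a time offset in seconds as [HH:MM:SS]."""
    total = int(seconds)
    hours, remainder = divmod(total, 3600)
    minutes, secs = divmod(remainder, 60)
    return f"[{hours:02d}:{minutes:02d}:{secs:02d}]"


def segments_to_draft(segments: list[dict]) -> str:
    """Turn Whisper-style segments into a draft with one timestamped line per segment."""
    lines = []
    for seg in segments:
        # "SPEAKER?" is a placeholder for the researcher to fill in during review.
        lines.append(f"{format_timestamp(seg['start'])} SPEAKER?: {seg['text'].strip()}")
    return "\n".join(lines)


# With the openai-whisper package installed, the segments would come from:
#   import whisper
#   model = whisper.load_model("base")
#   segments = model.transcribe("interview_01.mp3")["segments"]
# Hypothetical segments are used here so the sketch runs without audio.
segments = [
    {"start": 0.0, "text": " Thanks for joining me today."},
    {"start": 932.4, "text": " The budget was the main issue."},
]
print(segments_to_draft(segments))
```

The output is only a starting point: the review pass, where you listen along and correct names, jargon, and speaker labels, is still where the real quality control happens.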

What used to take hours of tedious typing now becomes a much shorter, more focused editing session. For researchers, this new workflow frees up precious time and mental energy, letting you dive into the actual analysis almost immediately.

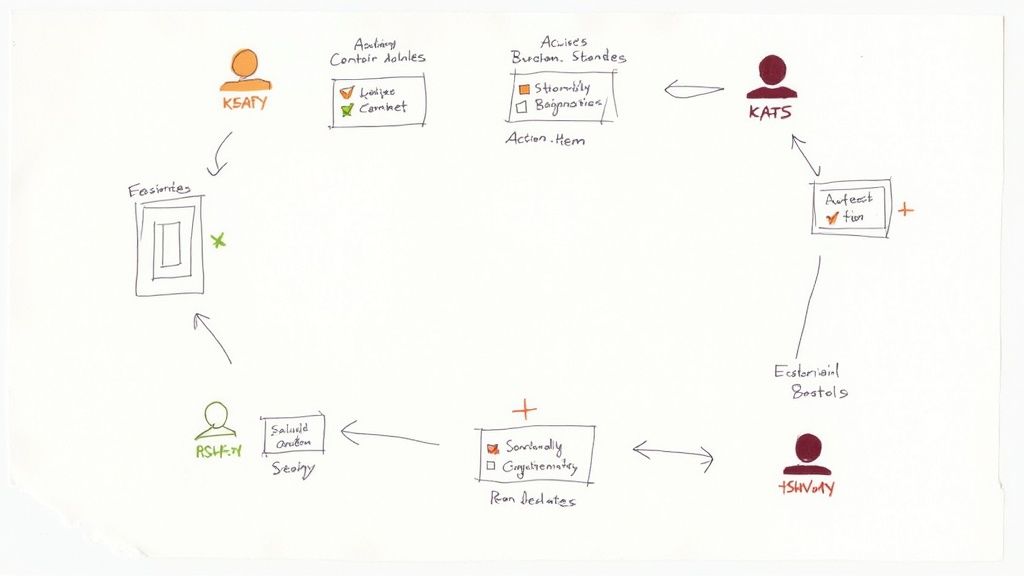

A Head-to-Head Comparison

So, which path is right for you? It really comes down to balancing your project's budget, timeline, and accuracy requirements. Here’s a quick breakdown to help you decide.

Manual vs AI-Assisted Transcription Methods

To make the choice clearer, let's compare manual, fully automated, and the hybrid AI-assisted approaches across the factors that matter most to qualitative researchers.

For most qualitative researchers, the AI-assisted or hybrid model is the clear winner. It offers the perfect blend of machine speed and human intellect, giving you a workflow that’s both incredibly efficient and academically rigorous. You get the best of both worlds without the major drawbacks of the other methods.

Essential Best Practices for High-Quality Transcripts

Creating a transcript is one thing, but creating one that’s actually ready for analysis is another ballgame entirely. The real difference comes down to a few key practices that protect the integrity of your data. Think of them as your quality control checklist—they make sure the final product is reliable, accurate, and ready for a deep dive.

When you follow a clear protocol from the start, you turn a simple text file into a valuable research asset. You’re building a foundation that prevents the kind of small inconsistencies that can quietly throw off your analysis later on.

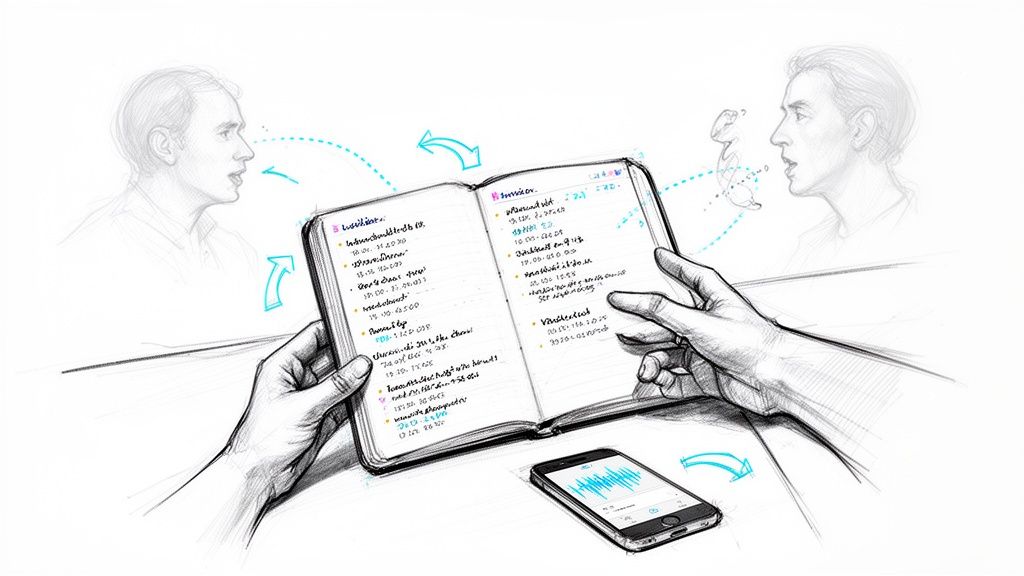

Standardize Speaker Identification

First things first: you have to know who’s talking. Clear and consistent speaker labels are absolutely essential. Without them, your transcript quickly becomes a confusing mess of dialogue, and you'll waste hours trying to figure out who said what.

Choose a simple, uniform system and stick with it for every single file.

Common approaches include:

- Interviewer: "I" or "IV"

- Participant: "P" or "P1," "P2" for multiple people

- Pseudonyms: Consistent fake names like "Anna" or "Mark"

The goal here is pure consistency. A well-labeled transcript means you can instantly pull quotes and analyze what each person contributed without having to scrub back through the audio. If you want a more detailed walkthrough, our article on how to properly transcribe an interview has some great step-by-step advice.
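If your files arrive with mixed labels (one says "Interviewer," another "IV," and an AI draft says "Speaker 1"), a small script can bring them into line. This is a minimal sketch: the `LABEL_MAP` contents and the `standardize_labels` helper are hypothetical, and it assumes each turn starts with a label followed by a colon.

```python
import re

# Hypothetical mapping from labels found in raw files to the project's standard labels.
LABEL_MAP = {
    "interviewer": "I",
    "iv": "I",
    "speaker 1": "P1",
    "speaker 2": "P2",
}

# A short label at the start of a line, followed by a colon and the speech.
TURN = re.compile(r"^\s*([^:]{1,30}):\s*(.*)$")


def standardize_labels(transcript: str, label_map: dict[str, str]) -> str:
    """Rewrite known speaker labels; leave every other line untouched."""
    out = []
    for line in transcript.splitlines():
        match = TURN.match(line)
        if match and match.group(1).strip().lower() in label_map:
            label = label_map[match.group(1).strip().lower()]
            out.append(f"{label}: {match.group(2)}")
        else:
            out.append(line)
    return "\n".join(out)


raw = "Interviewer: How was it?\nSpeaker 1: Fine.\nIV: And then?"
print(standardize_labels(raw, LABEL_MAP))
```

Only labels listed in the map are rewritten, so an unexpected label stays visible in the output and can be added to the map deliberately.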

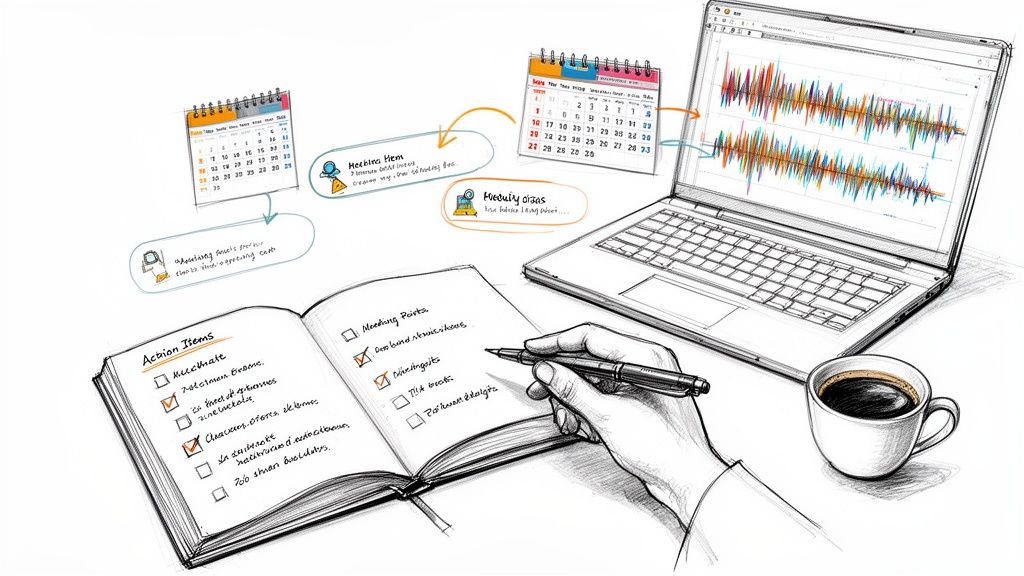

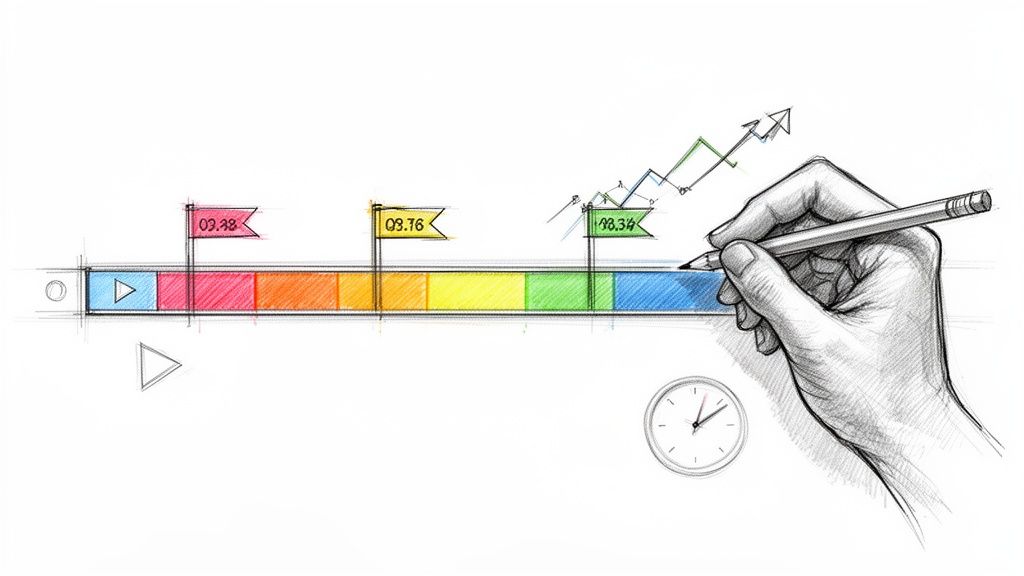

Use Timestamps and a Transcription Key

Trust me, timestamps are your best friend when it’s time to analyze your data. By dropping a timestamp (like [00:15:32]) into the text at regular intervals—say, every minute or every time the speaker changes—you create a direct lifeline back to the original audio. This makes it incredibly easy to double-check a quote or listen again for tone and emotional context.
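Here is a small sketch of how that lifeline works in practice: given a quote, find the nearest timestamp before it and convert it to seconds so you know where to seek in the audio. It assumes timestamps are written exactly as `[HH:MM:SS]`; the `locate_quote` helper and the sample text are hypothetical.

```python
import re
from typing import Optional

# Matches standalone timestamps such as [00:15:32].
TIMESTAMP = re.compile(r"\[(\d{2}):(\d{2}):(\d{2})\]")


def locate_quote(transcript: str, quote: str) -> Optional[int]:
    """Return the audio offset in seconds of the last timestamp before the quote.

    Returns None if the quote is not found or no timestamp precedes it.
    """
    position = transcript.find(quote)
    if position == -1:
        return None
    offset = None
    for match in TIMESTAMP.finditer(transcript):
        if match.start() > position:
            break
        hours, minutes, seconds = (int(part) for part in match.groups())
        offset = hours * 3600 + minutes * 60 + seconds
    return offset


text = "[00:15:00] P1: We started late.\n[00:16:00] P1: The budget was cut twice."
print(locate_quote(text, "budget was cut"))
```

The denser your timestamps, the closer this lands to the exact moment, which is one reason to add them at every speaker change rather than only once a minute.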

You’ll also want to create a transcription key. This is just a simple legend that explains how you’ll handle non-verbal sounds and other quirks of human conversation.

A transcription key is basically a style guide for your transcript. It makes sure every laugh, long pause, or interruption is noted in the exact same way, which brings a much-needed level of consistency to your data.

For example, your key might look something like this:

- [laughter] for audible laughing

- [crosstalk] when speakers talk over each other

- [pause] for significant silences

- [inaudible 00:17:10] for words you just can't make out

Having this simple document on hand ensures that anyone helping with the project—or even just reading the transcripts—knows exactly what your notations mean.
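A key is also easy to enforce automatically. The sketch below stores the key as a dictionary and reports any bracketed tag in a transcript that the key does not define. The `TRANSCRIPTION_KEY` entries mirror the example above; the `unknown_tags` helper is a hypothetical illustration and only recognizes single-word tags, optionally followed by a timestamp.

```python
import re

TRANSCRIPTION_KEY = {
    "laughter": "audible laughing",
    "crosstalk": "speakers talk over each other",
    "pause": "significant silence",
    "inaudible": "words that cannot be made out",
}

# A single-word tag, optionally followed by a timestamp: [pause], [inaudible 00:17:10].
TAG = re.compile(r"\[([a-z]+)(?:\s+\d{2}:\d{2}:\d{2})?\]", re.IGNORECASE)


def unknown_tags(transcript: str, key: dict[str, str]) -> list[str]:
    """Return, in alphabetical order, the tags used in the transcript but missing from the key."""
    found = {match.group(1).lower() for match in TAG.finditer(transcript)}
    return sorted(found - set(key))


draft = "P1: It was fine [laughs]. [pause] I felt [inaudible 00:17:10]."
print(unknown_tags(draft, TRANSCRIPTION_KEY))
```

Running a check like this across all files before analysis catches the case where one transcriber wrote [laughs] and another wrote [laughter].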

Uphold Ethical Obligations with Anonymization

In qualitative research, protecting your participants' confidentiality isn't just a good idea—it's an ethical requirement. Before you start your analysis or share any data, your transcripts must be carefully anonymized to scrub them of all personally identifiable information (PII).

This means going through and either removing or replacing sensitive details. For instance:

- Swap out real names for pseudonyms ("Jane Doe" becomes "Participant A").

- Generalize specific places ("I work at Acme Corp on 123 Main Street" becomes "I work at [Participant's Employer] in [City]").

- Take out the names of other people, like friends or family members.

This is a critical step that both protects the people who trusted you with their stories and ensures your research holds up to ethical standards. Good data management doesn't stop at transcription; how you store and organize this information is just as important. For a wider look at handling sensitive data, these knowledge management best practices offer some valuable principles.
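The replacements described above can be partly automated once you have listed the identifying details for each interview. This is a minimal sketch with a hypothetical `anonymize` helper and a hand-built replacement table; it only finds the exact strings you give it, so a manual read-through is still needed to catch anything you did not anticipate.

```python
import re


def anonymize(text: str, replacements: dict[str, str]) -> str:
    """Replace each listed identifying string with its placeholder, ignoring case."""
    # Handle longer strings first so a full phrase is replaced before any shorter part of it.
    for original in sorted(replacements, key=len, reverse=True):
        pattern = re.compile(rf"\b{re.escape(original)}\b", re.IGNORECASE)
        # A function replacement inserts the placeholder literally.
        text = pattern.sub(lambda _match, value=replacements[original]: value, text)
    return text


# Hypothetical replacement table built by the researcher for one interview.
table = {
    "Jane Doe": "Participant A",
    "Acme Corp": "[Participant's Employer]",
    "on 123 Main Street": "in [City]",
}
print(anonymize("Jane Doe said: I work at Acme Corp on 123 Main Street.", table))
```

Keep the replacement table itself stored separately from the transcripts and under the same access controls as the original recordings, since it is effectively the key that re-identifies participants.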

How to Navigate Common Transcription Challenges

No matter how carefully you prepare, the reality of audio recording is often messy. You'll run into background noise, people talking over each other, and thick accents that can turn a simple job into a real puzzle. Learning to navigate these hurdles is essential for producing transcripts that you can actually trust.

Getting through these challenges isn't about finding a magic solution. It’s about having a solid strategy for dealing with less-than-perfect audio. Thinking ahead and solving these problems as they come up protects the integrity of your research right from the start.

Handling Poor Audio Quality

Poor audio is probably the most common headache researchers deal with. A microphone that’s too far away, the low hum of an air conditioner, or the clatter of a coffee shop can easily drown out what's being said.

When you hit a patch of audio you can't make out, your first impulse might be to just take a guess. Don't do it. A better approach is to work through it methodically:

- Listen again (and again): Play that tricky section back a few times. Try it at different speeds and volumes—slowing the audio down can sometimes make the words pop.

- Get good headphones: A quality pair of noise-canceling headphones is a must-have. They're fantastic for isolating speech from all the other noise.

- Mark it [inaudible]: If a word or phrase is truly impossible to understand after a few tries, just tag it. It is always better to admit something is missing than to make something up. For instance: "I felt that the project was [inaudible 00:21:14]."

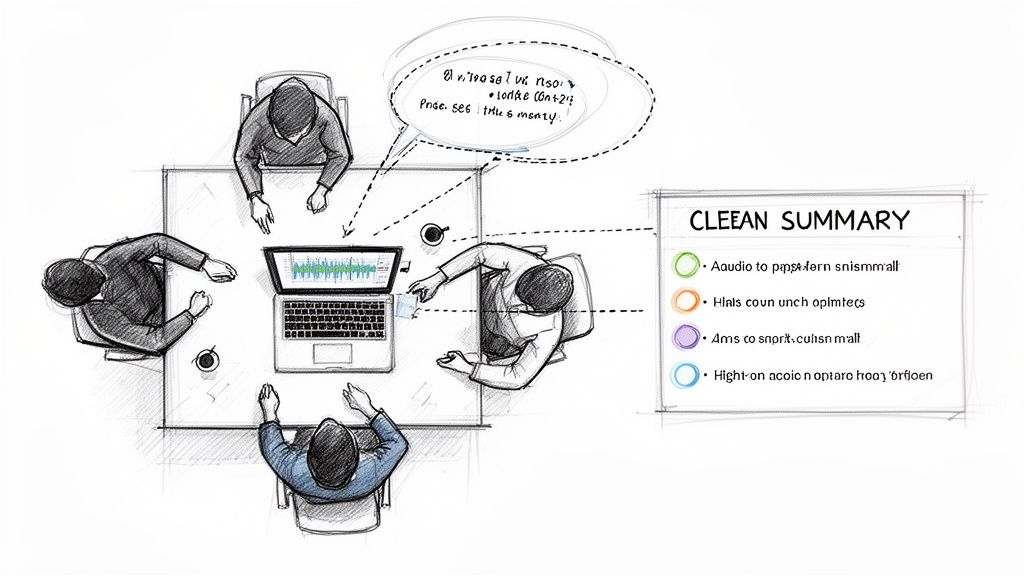

Managing Multiple and Overlapping Speakers

Focus groups and interviews with several people can yield incredibly rich data. They can also create absolute chaos when everyone starts talking at once. This is where consistent speaker labels and a clear system for noting crosstalk are lifesavers.

When multiple voices collide, your goal is to capture as much as you can without making the transcript a confusing mess. If you have to choose, prioritize clarity over trying to document every single overlapping sound.

A simple tag like [crosstalk] in your transcription key can mark where the overlap happens. From there, you can try to transcribe what each person said on a new line right after the tag. This keeps the document clean and still shows the conversational dynamic, preventing it from becoming a tangled block of text.

Dealing with Accents and Technical Jargon

Strong accents and highly specific jargon can easily stump even the best AI transcription tools. These models are trained on huge amounts of data, but they often lack exposure to a particular dialect or the niche vocabulary of a specific field.

This is where a human researcher's insight is truly invaluable. When you review the transcript, you're the expert in the room.

- Create a Glossary: Before you even start, make a quick list of key technical terms, acronyms, or proper names you expect to hear.

- Slow It Down: When listening to a participant with a strong accent, play the audio at a slower speed. This gives your brain a little more time to process their speech patterns.

- Human Correction is Critical: Let an AI tool do the heavy lifting on the first draft, but always be ready to jump in and make corrections. Your knowledge of the subject matter is the final, and most important, quality check. This careful review ensures the subtle but critical details aren't lost along the way.
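A glossary can also point you to the spots that most need your attention. The sketch below uses Python's standard `difflib` module to flag words in an AI draft that look like near-misses of glossary terms. The `flag_possible_terms` helper, the 0.8 similarity cutoff, and the sample glossary are assumptions chosen for illustration, and it only handles single-word terms.

```python
import difflib
import re


def flag_possible_terms(draft: str, glossary: list[str], cutoff: float = 0.8) -> list[tuple[str, str]]:
    """Return (word in draft, suggested glossary term) pairs for likely mis-transcriptions."""
    canonical = {term.lower(): term for term in glossary}
    flags = []
    for word in re.findall(r"[A-Za-z]+", draft):
        key = word.lower()
        if key in canonical:
            continue  # already spelled as in the glossary
        close = difflib.get_close_matches(key, list(canonical), n=1, cutoff=cutoff)
        if close:
            flags.append((word, canonical[close[0]]))
    return flags


glossary = ["NVivo", "phenomenology"]
draft = "We coded it in Envivo using phenomonology."
for word, suggestion in flag_possible_terms(draft, glossary):
    print(f"Check '{word}': did the speaker say '{suggestion}'?")
```

The script only suggests; it never rewrites the draft. That keeps the final call with the person who knows the subject matter and has listened to the audio.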

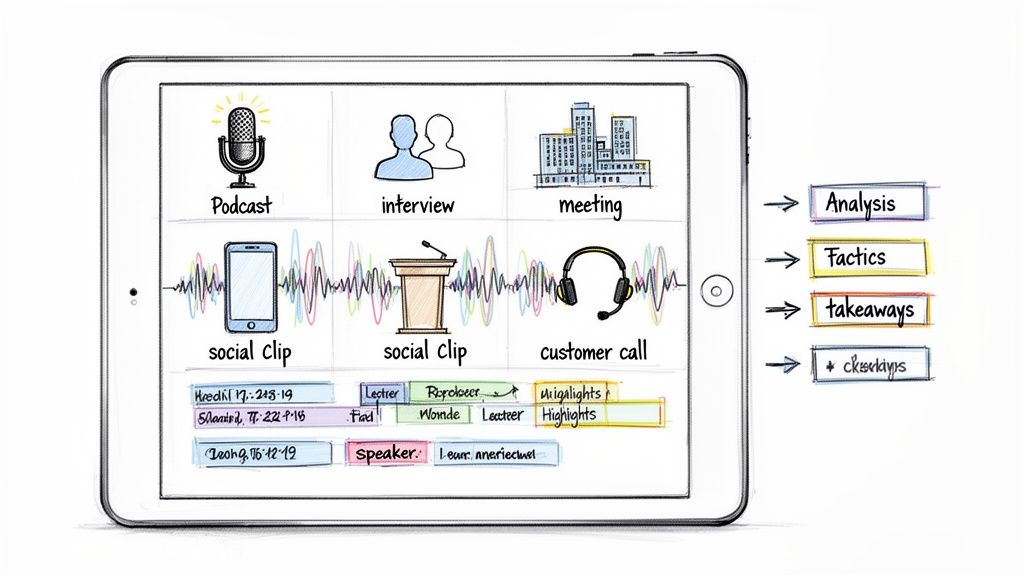

Streamlining Your Research With Whisper AI

After grappling with the common headaches of transcription, it becomes pretty obvious that your tools can either make or break your project. This is where modern AI platforms like Whisper AI step in. They’re built to tackle these very challenges head-on, turning transcription from a mind-numbing chore into a fluid part of your research.

What this really means is you spend less time hunched over a keyboard deciphering audio and more time actually thinking about your data. By automating the grunt work, you can jump straight into the good stuff—the analysis—with a lot more energy and focus.

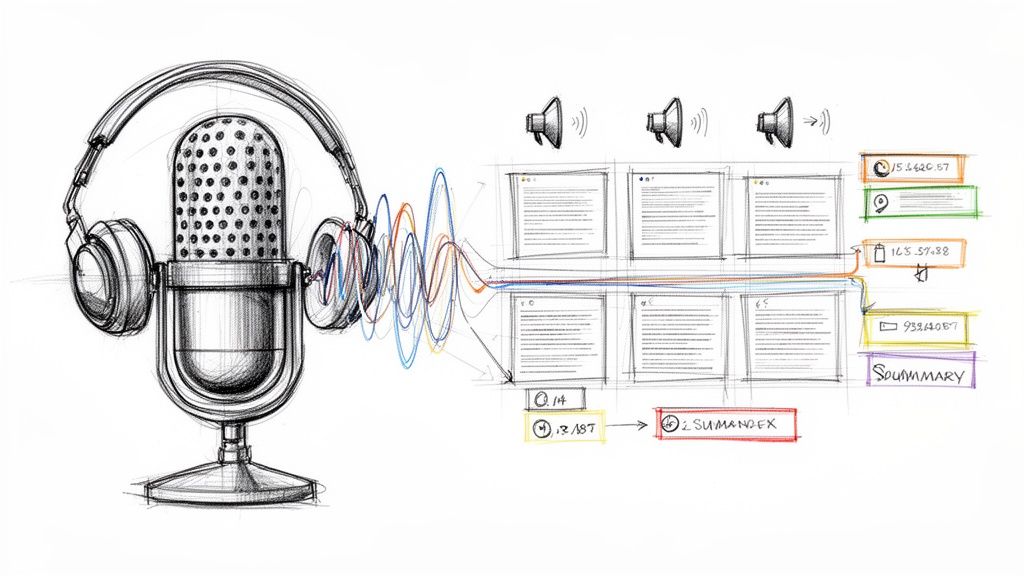

From Tedious Tasks to Automated Efficiency

Think about the biggest time-wasters in transcription in qualitative research. Manually labeling speakers and scrubbing through audio to find one specific quote? That’s hours of your life you’ll never get back. Whisper AI has practical features designed specifically for researchers to solve these exact problems.


For instance, its automated speaker identification is a lifesaver for focus group recordings. Instead of trying to guess who said what, the tool automatically tags each speaker, giving you a clean, organized transcript from the get-go.

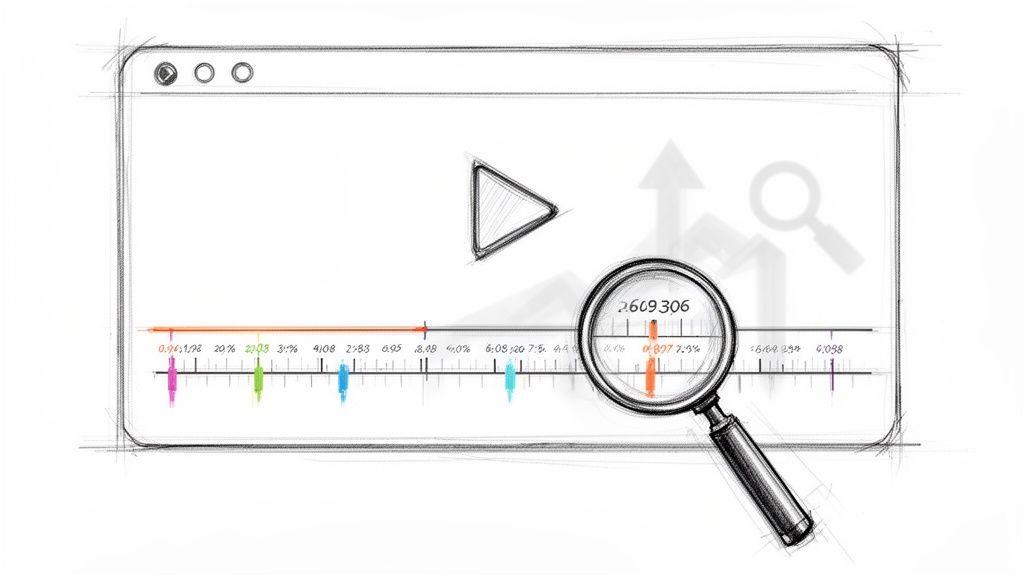

Another game-changer is the word-level timestamps. When you’re reviewing a transcript and a particular quote feels off, or you want to hear the emotional tone behind the words, you just click. That click takes you to the precise moment in the audio. It makes checking your work and ensuring accuracy incredibly fast.

Global Research and Seamless Integration

Qualitative research isn't confined to one language anymore. Projects are global, and your tools need to keep up. Whisper AI is built for this reality, accurately transcribing audio in over 92 languages. This capability blows the doors open for international studies, removing the huge hurdle of finding and vetting specialized multilingual transcribers.

By handling diverse languages and accents effectively, advanced AI tools make global research more accessible. They ensure that language is not an obstacle to uncovering rich qualitative insights from participants around the world.

When your transcript is ready, getting it into your analysis software is just as easy. You can download the text in common formats like DOCX or TXT, so it plays nicely with tools like NVivo or ATLAS.ti. This smooth handoff means no time wasted on clunky file conversions. If you want to see how it all works, there’s a great guide on how to use Whisper AI that walks you through its features.

Frequently Asked Questions

As you dive into qualitative research, you'll find that transcription is one of those areas where the practical details really matter. It's one thing to understand the theory, but quite another to manage the workflow. Let's tackle some of the most common questions that come up.

Think of this as a quick-start guide to help you navigate the process, from estimating timelines to choosing the right tools for your project.

How Long Should It Take to Transcribe One Hour of Audio?

This is the million-dollar question, and the honest answer is almost always "longer than you think." A good rule of thumb for a seasoned professional transcribing manually is about 4 to 6 hours for one hour of clear, straightforward audio.

But that's a best-case scenario. Several things can throw a wrench in that estimate:

- Audio Quality: A fuzzy recording with background noise can easily double the time needed.

- Number of Speakers: Trying to untangle a lively focus group discussion with people talking over each other? You could be looking at 8 hours or more.

- Complex Content: Highly technical language or thick accents will have you constantly hitting rewind.

This is where AI-assisted transcription really changes the game. An AI tool can produce a first draft in just a few minutes. From there, you're left with the much more manageable task of editing and proofreading, which usually takes around 1 to 2 hours per audio hour.
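To see what those ratios mean for a whole project, here is a quick back-of-the-envelope calculation in Python. The per-hour ranges come straight from the figures above; the 12-hour project size and the `estimate_hours` helper are hypothetical.

```python
# Working hours needed per hour of clear audio, using the ranges quoted above.
MANUAL = (4, 6)        # seasoned professional, manual transcription
AI_ASSISTED = (1, 2)   # editing and proofreading an AI first draft


def estimate_hours(audio_hours: float, rate_range: tuple[float, float]) -> tuple[float, float]:
    """Return the (low, high) estimate of working hours for a given amount of audio."""
    low, high = rate_range
    return audio_hours * low, audio_hours * high


audio_hours = 12  # hypothetical project: twelve one-hour interviews
print("Manual:", estimate_hours(audio_hours, MANUAL))
print("AI-assisted:", estimate_hours(audio_hours, AI_ASSISTED))
```

For this hypothetical project, that is 48 to 72 hours of manual work against 12 to 24 hours of review, before adjusting for poor audio or multiple speakers.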

Can I Transcribe the Audio Myself?

Of course. Many researchers, particularly those just starting out or working with a small set of interviews, choose to do it themselves. The biggest benefit is that it forces you to become incredibly familiar with your data. You’ll catch the subtle pauses, the shifts in tone, and the hesitations that a transcript alone can't fully capture.

The downside? It's a massive time sink.

Transcribing your own interviews gives you a deep connection to the material, but you have to weigh that against the sheer number of hours it consumes. On larger projects, that time is often better spent on analysis and writing.

If you're dealing with more than a few interviews, the time commitment can quickly spiral and stall your entire project. This is the sweet spot for AI-assisted tools. They offer the best of both worlds: you still get that deep engagement with the text during the review phase, but you skip the tedious hours of initial typing.

What Is the Most Accurate Transcription Method?

For top-tier accuracy, nothing beats a hybrid approach that combines AI speed with a final human review. A skilled human transcriber can hit 99%+ accuracy, but it's a slow and costly process.

On its own, AI is impressively fast but typically lands somewhere between 85% and 95% accuracy. It often stumbles over unique accents, industry-specific jargon, or overlapping conversations.

The gold standard for research fuses these two methods. You let an AI service generate a fast, solid first draft. Then, a human researcher—someone who actually understands the project's context—cleans it up. This combined workflow consistently achieves 99%+ accuracy while saving an incredible amount of time.
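The percentages above do not come with a stated measurement method. One common way to put a number on transcript accuracy is word error rate: count the word-level substitutions, insertions, and deletions needed to turn the draft into a trusted reference, then divide by the reference length. The sketch below assumes accuracy means one minus that rate; the `word_accuracy` helper is illustrative and ignores punctuation handling.

```python
def word_accuracy(reference: str, hypothesis: str) -> float:
    """Return 1 minus the word error rate of hypothesis against reference."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript is empty")
    # Word-level edit distance, computed one row at a time.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # word missing from the draft
                            curr[j - 1] + 1,      # extra word in the draft
                            prev[j - 1] + cost))  # match or substitution
        prev = curr
    return 1 - prev[-1] / len(ref)


# Hypothetical check of a short passage you have corrected by hand.
print(word_accuracy("the budget was cut twice", "the budget was caught twice"))
```

Spot-checking a few minutes of each recording this way gives you a rough, project-specific sense of how much review an AI draft needs, instead of relying on a generic figure.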

Ready to transform your qualitative data workflow? With Whisper AI, you can get fast, accurate transcripts in over 92 languages, freeing you up to focus on what truly matters—uncovering powerful insights. Stop typing and start analyzing. Try it now and experience the future of research transcription. https://whisperbot.ai