How to Use Whisper AI for Flawless Transcription

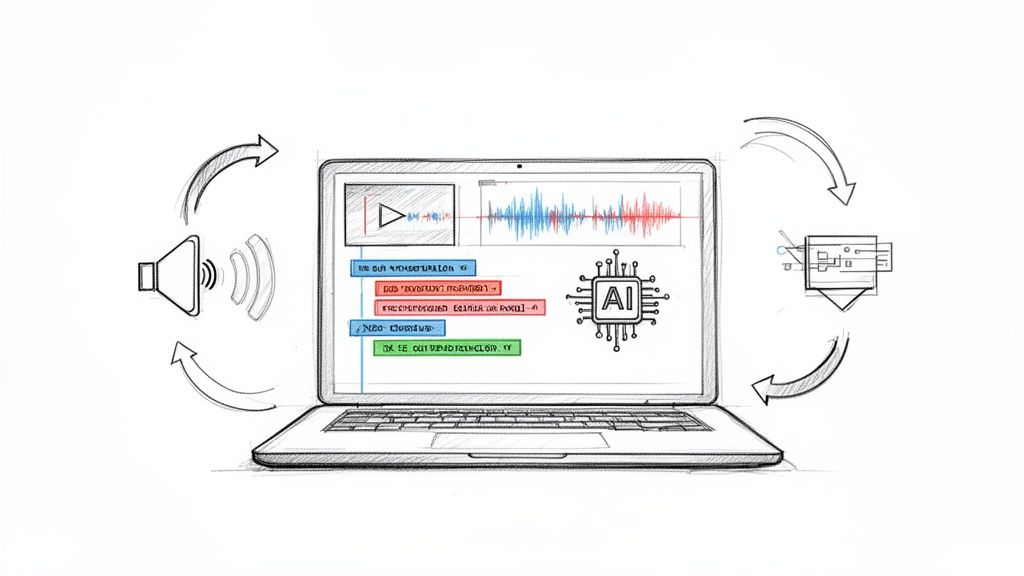

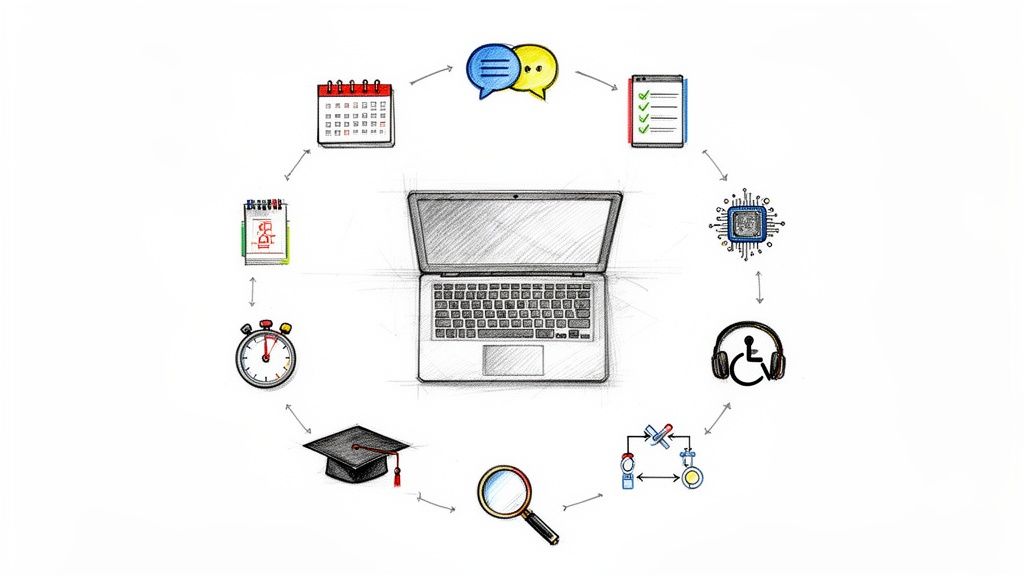

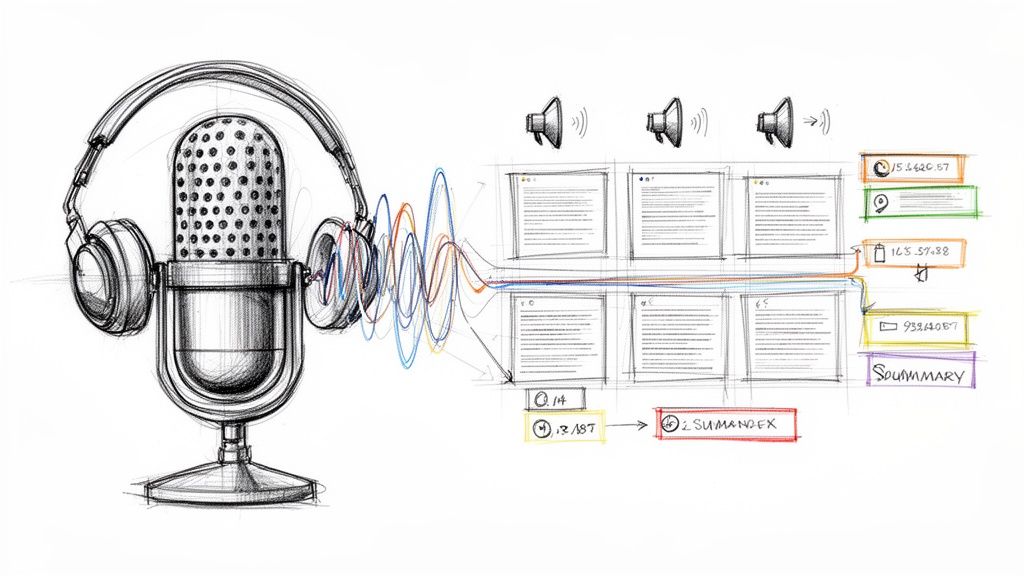

Getting started with Whisper AI really boils down to three simple actions: get your audio or video file in, pick your settings, and export the text. Having used it for countless projects, I can tell you it’s a straightforward process that turns spoken content from meetings, interviews, or lectures into a clean, accurate transcript in just a few minutes.

Your First Steps with Whisper AI

Jumping into a new tool can sometimes feel overwhelming, but Whisper AI is built to be intuitive from the get-go. This guide is based on my hands-on experience to help you get that first audio file uploaded and transcribed without any friction. We'll cover how to prep your files for the best results and walk you through the initial upload process.

The first thing you'll notice is a clean, uncluttered dashboard. The goal is to get you from point A to point B as quickly as possible, and the interface reflects that.

This kind of design means you can start your work immediately without needing to sit through lengthy tutorials. It makes the tech accessible whether you're a student transcribing a lecture or a market researcher analyzing focus groups.

So, What Makes It Work So Well?

The real magic behind Whisper AI is the sheer scale of its training. OpenAI released the model in 2022 after training it on a massive dataset: 680,000 hours of supervised audio collected from across the web. This data covered multiple languages and tasks, which is why Whisper is so good at understanding different accents, industry jargon, and even less-than-perfect audio. It supports transcription in close to 100 languages, though accuracy varies from one language to the next.

Unlike the old dictation software that required you to "train" it to your voice, this thing works right out of the box. From my own use, I can confirm it's already learned from such a diverse range of speakers that it can handle just about anything you throw at it.

"Modern AI-powered tools like Whisper are a different beast. They use large neural networks trained on hundreds of thousands of hours of diverse audio and text. They don’t need training... They just work, straight out of the box, for a wide range of accents, languages, and speaking styles."

This power means you get a transcript that understands context, which helps it navigate tricky phrasing and ambiguity far better than older systems ever could. For anyone who needs to convert speech to text, this is a game-changer.

To give you a clearer picture, here's a quick rundown of what Whisper AI brings to the table.

Whisper AI Core Features at a Glance

This table summarizes the main capabilities you'll be working with.

These core functions are the foundation of a much faster and more efficient transcription workflow.

Prepping for Your First Transcription

Before you hit that upload button, a little prep work can make a big difference. I've learned that the quality of your output is directly linked to the quality of your input.

Here are a few quick tips I always recommend based on my experience:

- Prioritize Clean Audio: If you can, try to minimize background noise. A clear recording from a one-on-one interview will always yield a better result than a chaotic meeting with people talking over each other.

- Check Your File Format: Whisper AI handles most common formats like MP3, MP4, WAV, and M4A. A quick check beforehand ensures you won't run into any compatibility issues.

- Have Some Context: Knowing who was speaking and the general topics will help you spot-check the final transcript much faster.
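If you're prepping a whole folder of recordings, the format check is easy to script. Here's a minimal sketch in Python that assumes only the four formats named above are accepted; the platform may well take more, and the function names are made up for illustration.

```python
from pathlib import Path

# Formats named in this guide. Treat this set as an assumption:
# the actual upload form may accept additional formats.
SUPPORTED_EXTENSIONS = {".mp3", ".mp4", ".wav", ".m4a"}


def is_supported(filename: str) -> bool:
    """Return True if the file extension is one of the formats listed above."""
    return Path(filename).suffix.lower() in SUPPORTED_EXTENSIONS


def partition_files(filenames: list[str]) -> tuple[list[str], list[str]]:
    """Split filenames into (ready to upload, needs converting first)."""
    ready = [name for name in filenames if is_supported(name)]
    needs_conversion = [name for name in filenames if not is_supported(name)]
    return ready, needs_conversion
```

Calling `partition_files(["interview.wav", "lecture.ogg"])` puts the WAV file in the first list and the OGG file in the second, so you know what to convert before uploading.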

If you want a solid primer on the basics, understanding how to create a transcript from any audio file with AI tools is a great place to start. With these fundamentals down, you're well on your way to mastering Whisper AI.

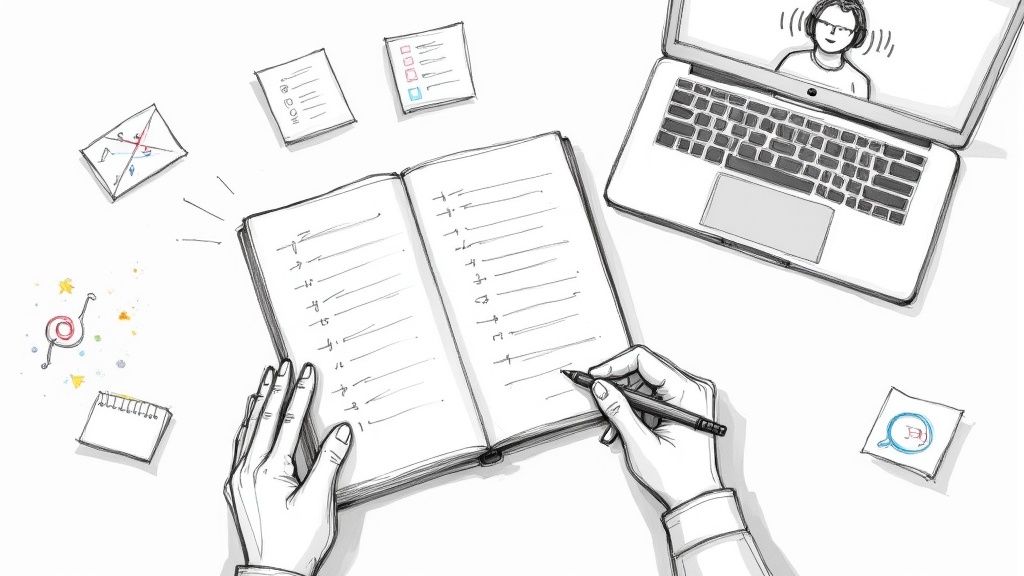

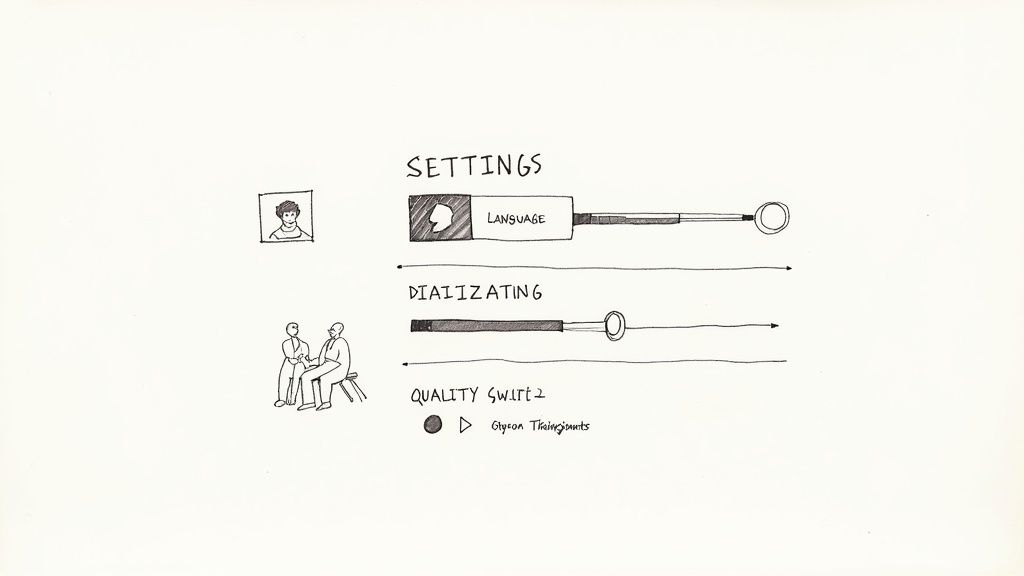

Dialing in Your Transcription Settings

Once your file is uploaded, you’ve reached the most important part of the process—the part that dictates the quality of your final transcript. Getting the most out of Whisper AI isn't about just hitting "transcribe"; it's about giving the AI the right instructions before it starts. Think of it as briefing a human assistant; the clearer your directions, the better the result.

Sure, you can rely on the defaults, and they're often pretty good. But in my experience, spending just a few seconds tweaking these settings can be the difference between getting a rough draft that needs a ton of cleanup and a polished document that’s ready to go.

Lock in the Language for Pinpoint Accuracy

Whisper is incredibly good at auto-detecting languages, but I always recommend manually setting it if you can. It’s a tiny step that pays off big time. If you know for a fact the recording is in German, just select German.

This simple action primes the model, essentially telling it which dictionary to use. It keeps the AI from mistaking a regional accent or a stretch of technical jargon for a completely different language. It’s a two-second pro-tip that has saved me countless minutes of fixing bizarre transcription errors.

Setting the language also helps Whisper reach its low word error rate (WER), which is the share of words in a transcript that come out wrong, missing, or inserted by mistake. The data speaks for itself: 4 languages have a WER under 5%, with another 9 languages sitting between 5% and 10%. That's a tiny margin of error. If you're curious about the numbers, you can find more details on OpenAI's performance metrics on pihappiness.com.
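If you want to measure WER on your own recordings, the standard calculation is the number of word substitutions, deletions, and insertions needed to turn the AI transcript into a hand-corrected one, divided by the word count of the corrected version. The sketch below is a plain-Python illustration of that formula, not the evaluation script behind the figures above. It only lowercases and splits on whitespace, so punctuation differences count as errors.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in reference."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # Word-level edit distance, computed one row at a time.
    # previous[j] holds the distance between the reference words seen so far
    # and the first j words of the hypothesis.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            current.append(min(
                previous[j] + 1,         # deletion (word missing from hypothesis)
                current[j - 1] + 1,      # insertion (extra word in hypothesis)
                previous[j - 1] + cost,  # substitution, or a match when cost is 0
            ))
        previous = current
    return previous[-1] / len(ref)
```

For example, comparing "the cat sat on the mat" against "the cat sat on a mat" gives one substitution across six reference words, a WER of about 16.7%.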

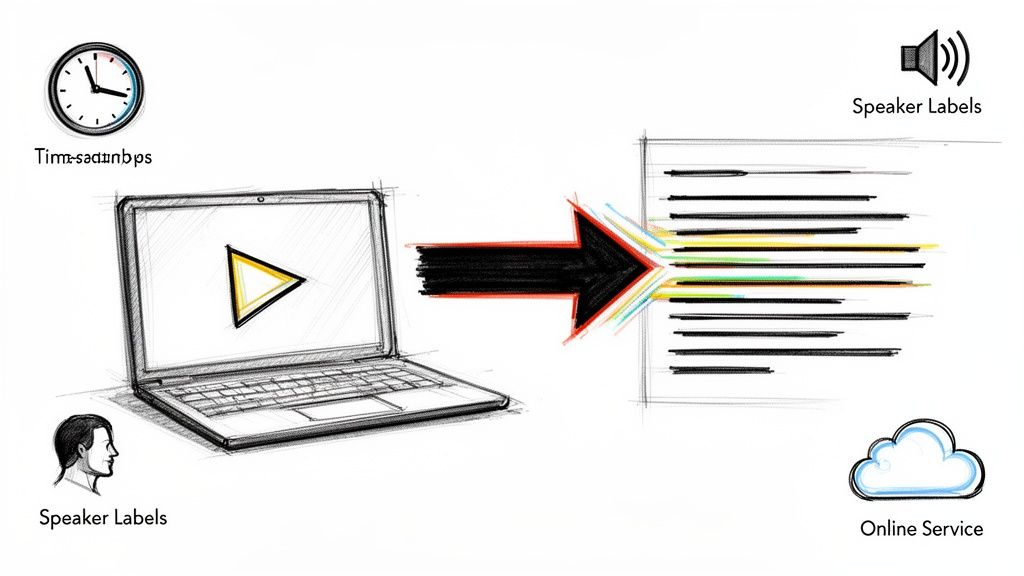

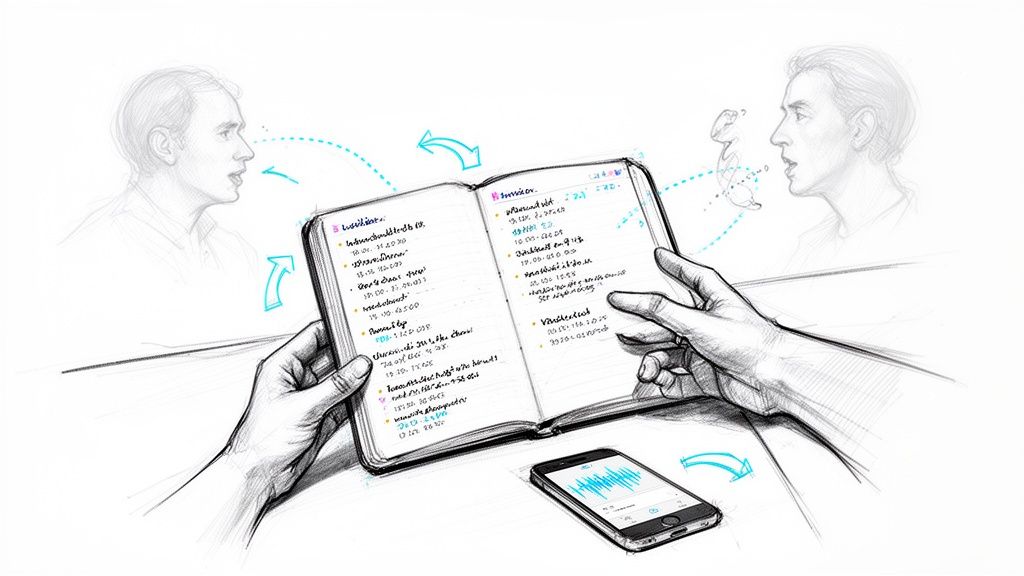

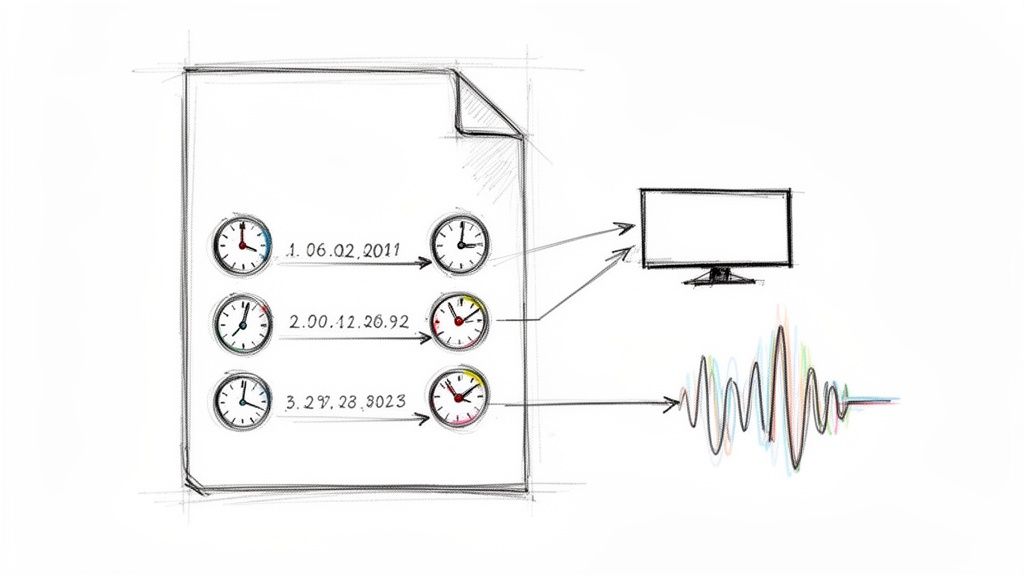

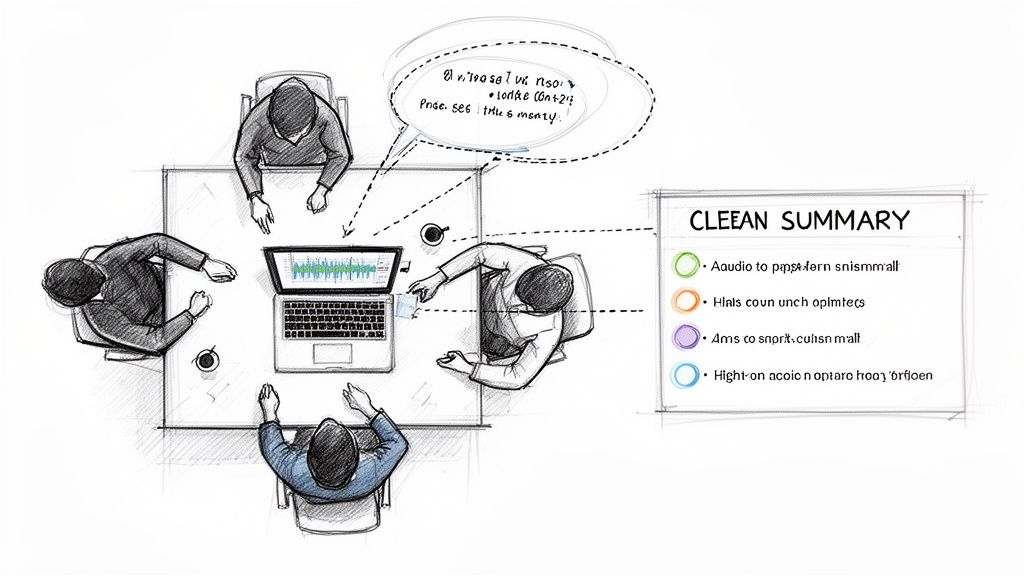

Tell Who's Talking with Speaker Diarization

Ever tried reading a meeting transcript where you can't tell who said what? It’s basically useless—just a confusing wall of text. That’s where speaker diarization comes in. You might see it labeled as "speaker detection" or "speaker labels," but it all does the same magic.

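Flick this switch, and Whisper AI analyzes the distinct vocal characteristics of each voice in the audio. It then tags each part of the dialogue with a label like "Speaker 1" or "Speaker 2." Worth knowing: the underlying open-source Whisper model doesn't label speakers by itself, so diarization is a layer that tools add on top of it.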

For anyone transcribing interviews, podcasts, or team meetings, this isn’t just a nice-to-have; it’s a must. It transforms a flat script into a structured conversation that actually makes sense.

Imagine you're cutting up a podcast episode with two hosts and a guest. Without speaker labels, you’d be stuck listening back to the audio repeatedly just to figure out who’s talking. With diarization, the transcript arrives perfectly organized, ready for you to pull quotes or write show notes.
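To make "perfectly organized" concrete, here's a rough sketch of how diarized output can be folded into readable speaker turns. The segment shape, a list of dictionaries with `speaker` and `text` keys, is a hypothetical stand-in; your export may name things differently.

```python
def merge_speaker_turns(segments: list[dict]) -> list[str]:
    """Join consecutive segments from the same speaker into one labeled turn."""
    turns: list[tuple[str, list[str]]] = []
    for segment in segments:
        speaker = segment["speaker"]
        text = segment["text"].strip()
        if turns and turns[-1][0] == speaker:
            # Same person is still talking: extend the current turn.
            turns[-1][1].append(text)
        else:
            # A new speaker starts a new turn.
            turns.append((speaker, [text]))
    return [f"{speaker}: {' '.join(parts)}" for speaker, parts in turns]
```

Two back-to-back segments from "Speaker 1" followed by one from "Speaker 2" come out as two lines, one per turn, which is the structure you want when pulling quotes.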

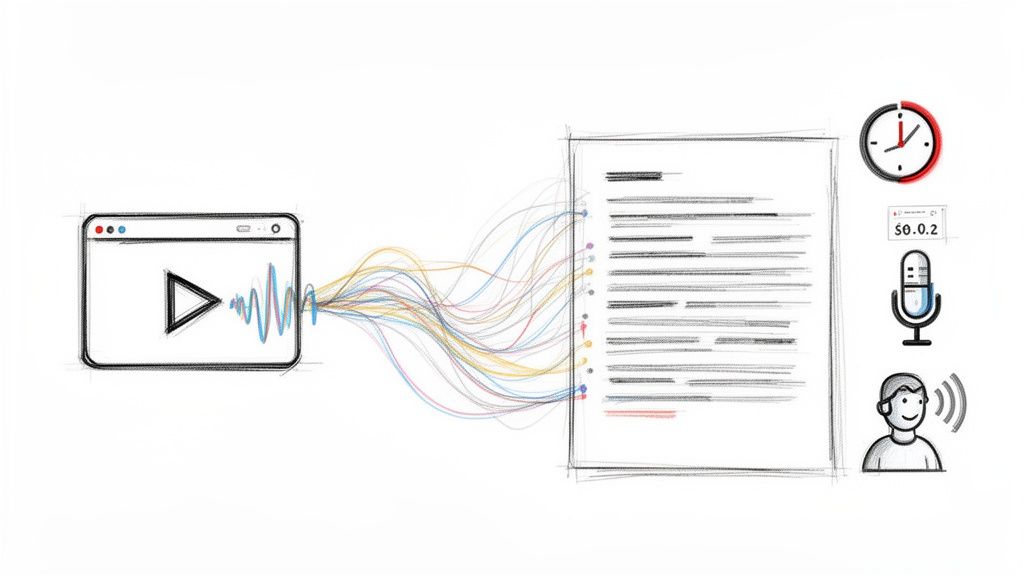

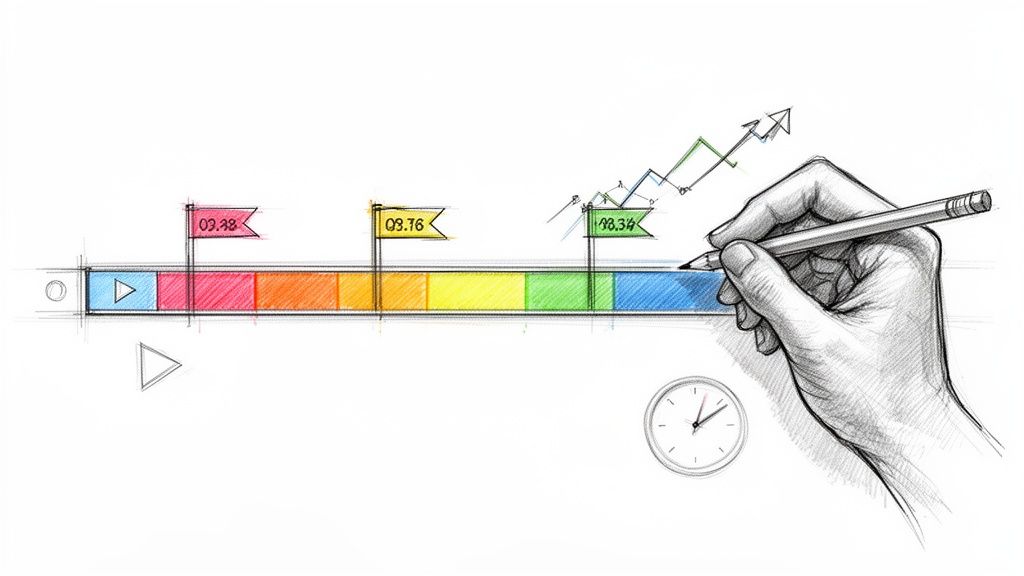

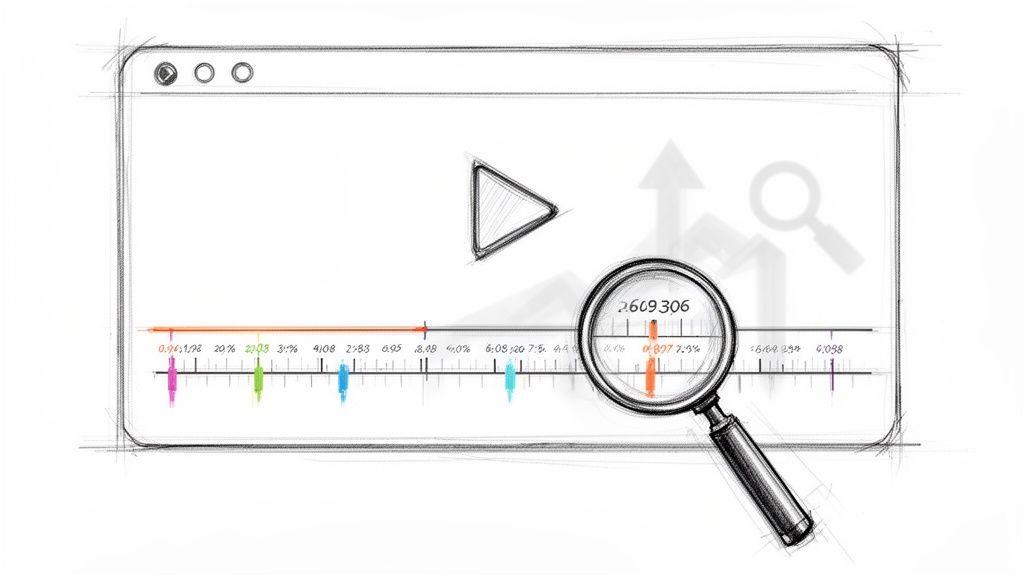

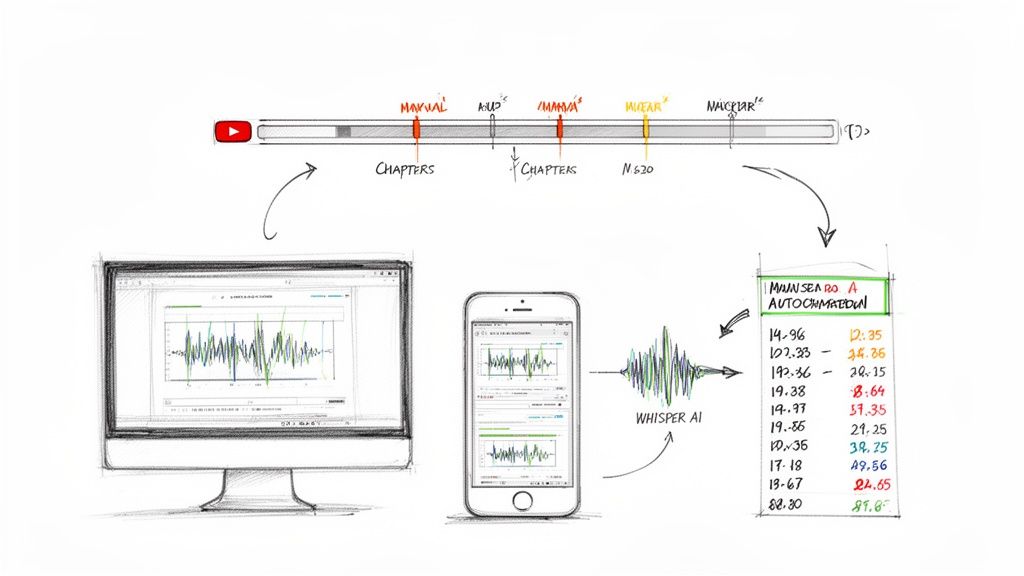

Why Timestamps Are a Secret Weapon

The last setting I never skip is timestamps. Toggling this on embeds timecodes directly into the text, linking every word to its exact spot in the audio or video. It sounds minor, but in practice, it’s a huge time-saver.

Here’s how it helps in the real world:

- Video Editors: Need to find that perfect soundbite for a social media clip? Just search the transcript, find the phrase, and the timestamp takes you right to that moment in your video editor. No more endless scrubbing.

- Researchers: When you’re analyzing interviews, you can instantly jump to the original audio to check a speaker's tone or inflection on a key quote. It adds a whole new layer of context to your work.

- Content Creators: Timestamps make creating captions (like SRT or VTT files) a breeze. The text is already synced to the right timing, making your videos more accessible and engaging without the extra work.
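The "search the transcript, jump to the moment" habit is simple enough to sketch in a few lines. This example assumes each transcript segment is a dictionary with a `start` time in seconds and a `text` field, which is an illustrative shape and not a documented export format.

```python
def format_timestamp(seconds: float) -> str:
    """Render a time offset in seconds as HH:MM:SS."""
    total = int(seconds)
    hours, remainder = divmod(total, 3600)
    minutes, secs = divmod(remainder, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}"


def find_phrase(segments: list[dict], phrase: str) -> list[tuple[str, str]]:
    """Return (timestamp, text) for every segment containing the phrase."""
    needle = phrase.lower()
    return [
        (format_timestamp(segment["start"]), segment["text"])
        for segment in segments
        if needle in segment["text"].lower()
    ]
```

Searching for "pricing" returns each matching line together with the timecode to type into your video editor.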

By taking a moment to dial in these three settings—language, speakers, and timestamps—you’re not just transcribing. You're creating a smart, organized, and genuinely useful asset tailored to exactly what you need.

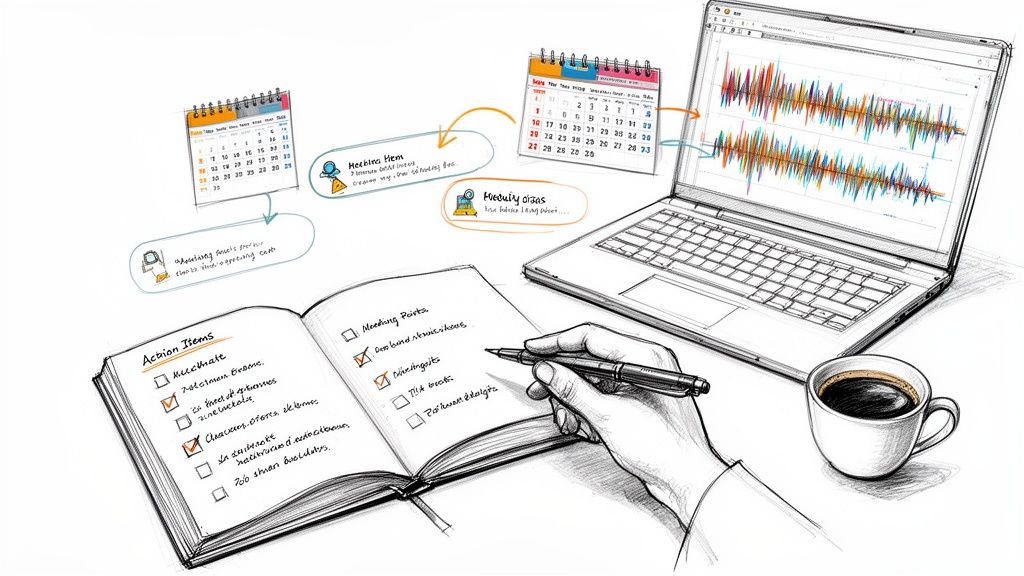

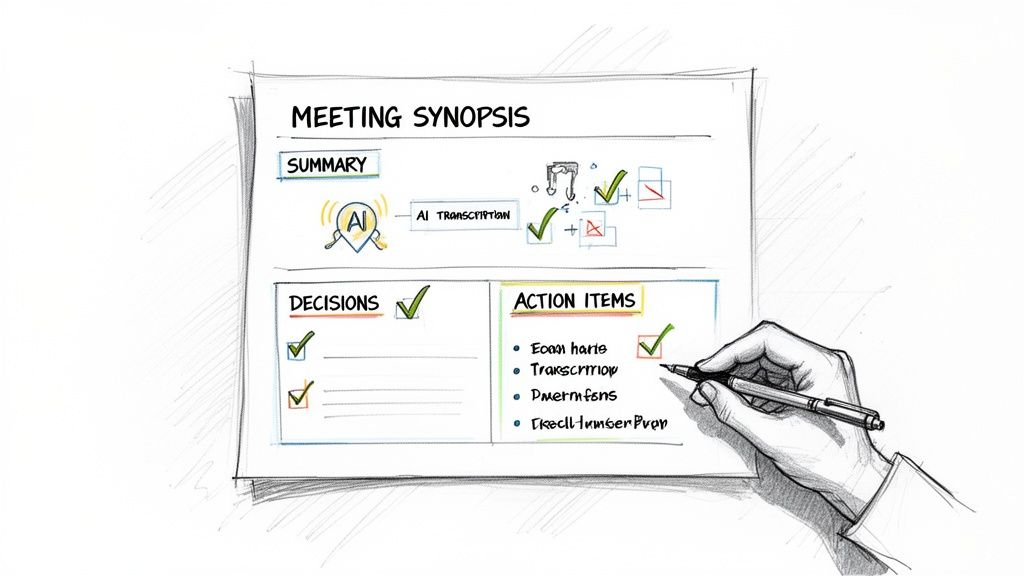

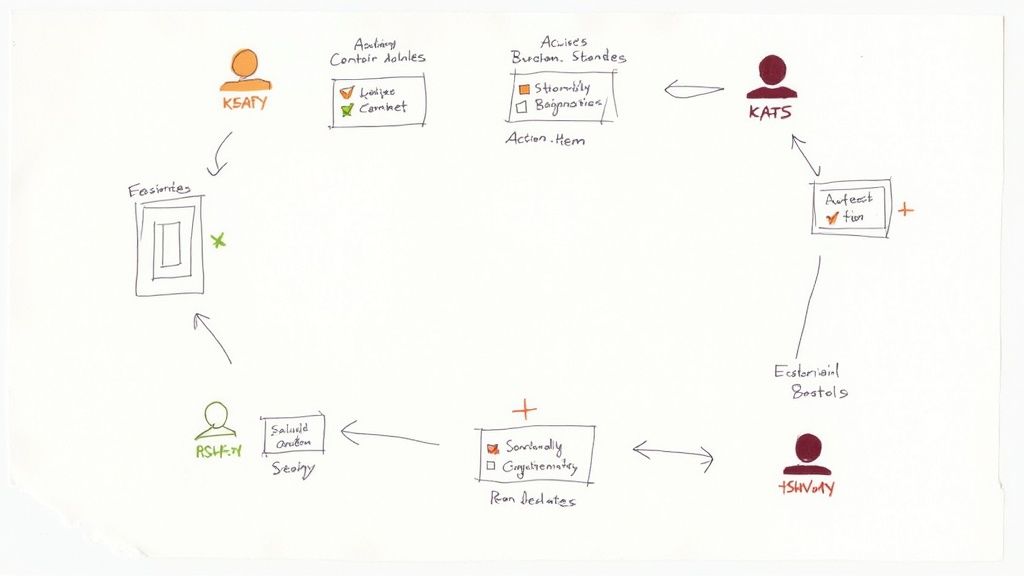

Uncovering Deeper Insights from Your Audio

A raw transcript is just the beginning. The real magic happens when you transform that text into tangible, usable intelligence. It's about shifting from just having the words to truly understanding what they mean.

Think of it this way: instead of just getting a printout of a conversation, you're getting a full-blown analysis. One of the quickest ways I do this is by generating an instant summary. Why reread an hour-long lecture or a rambling project meeting when I can get the core ideas in seconds? It's a huge time-saver.

This is a lifesaver for students cramming for an exam or a project manager trying to catch up on a meeting they missed. The AI cuts through the noise and gives you the key takeaways in a clean, simple format.

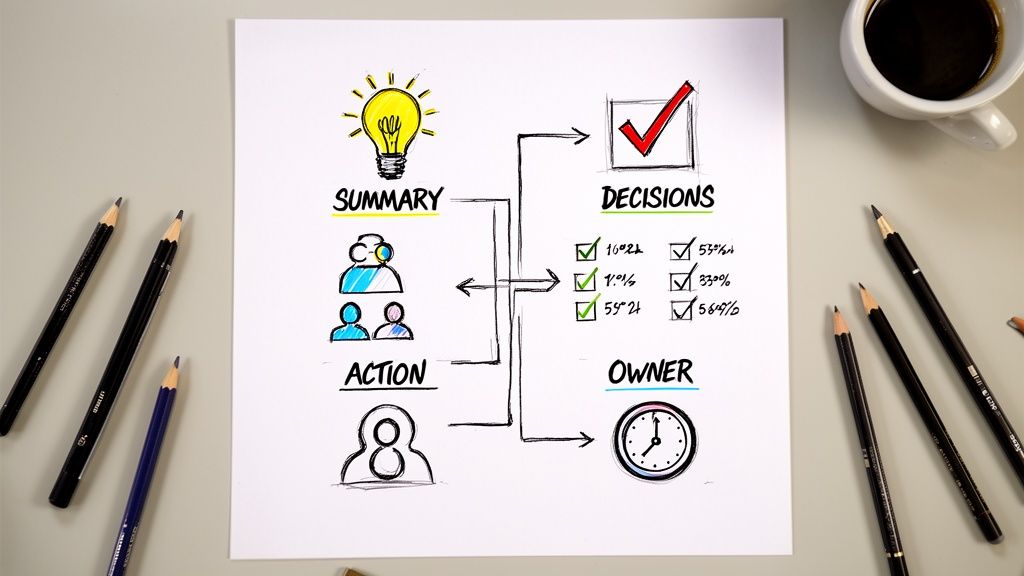

From Summaries to Actionable Highlights

While summaries provide the 30,000-foot view, highlights let you zoom in on the moments that truly matter. It’s like having an assistant who's already gone through your audio and pulled out all the best quotes, critical stats, or must-do action items.

This feature is incredibly practical for a lot of different people:

- Journalists and Researchers: Find that perfect, impactful quote for your article or study without scrubbing through hours of tape.

- Content Marketers: Easily pull short, punchy clips from a long podcast to create engaging social media posts.

- Business Teams: Quickly isolate who agreed to do what by when during a brainstorming session.

Whisper AI automatically flags these key moments for you. You don't have to hunt for the needle in the haystack anymore—it's handed to you, ready to go.

Using Follow-Up Questions to Dig Deeper

Okay, this is where things get really interesting. Once your transcript is ready, you can start treating it like your own personal search engine. Instead of rereading the whole thing, you can just ask it direct questions to find exactly what you need.

Let’s say you just transcribed a bunch of customer feedback calls. You could ask things like:

- "What were the customer's main pain points?"

- "Summarize all the positive feedback."

- "Did anyone mention our competitors by name?"

This completely changes how you interact with your content. It’s no longer a static, one-way document; it becomes a dynamic source of information you can have a conversation with. You can explore complex ideas without having to manually sift through every single word.

The ability to ask a transcript questions in plain English is a genuine productivity multiplier. It's like having a research assistant who has memorized every second of your audio and can recall any detail instantly.

This interactive approach is a game-changer for anyone doing detailed analysis. For researchers or hiring managers, knowing how to properly dig into interview data is crucial. We've actually put together a guide with more tips on this in our article on how to analyze interview data. It’ll help you get way more out of every conversation you record.

By combining summaries, highlights, and follow-up questions, you create a powerful workflow. You start broad, drill down into the most important moments, and then interrogate the text for specific, granular insights. It’s a method that ensures you squeeze every last drop of value from your audio and video.

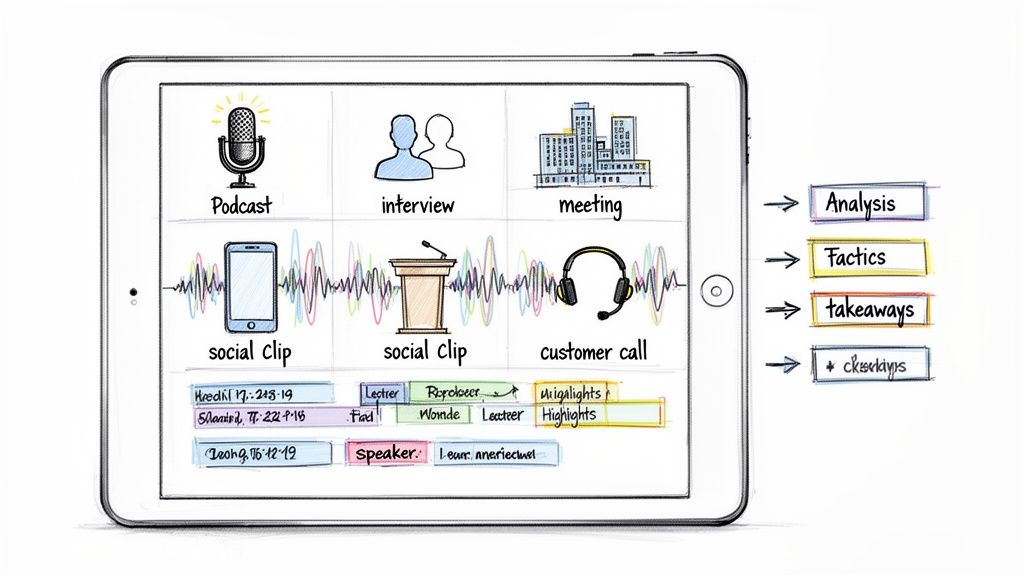

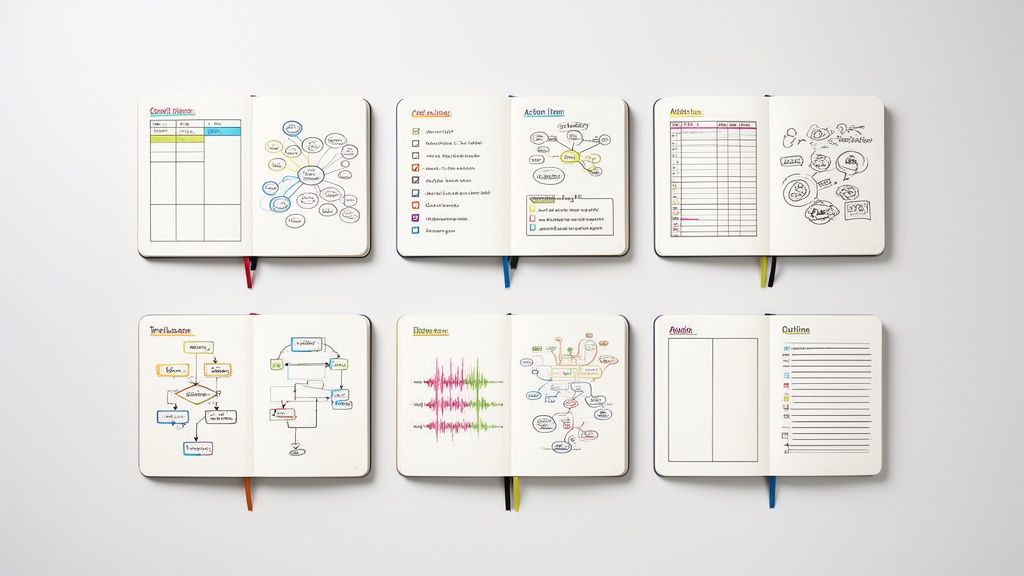

Getting the Most Out of Whisper AI in Your Field

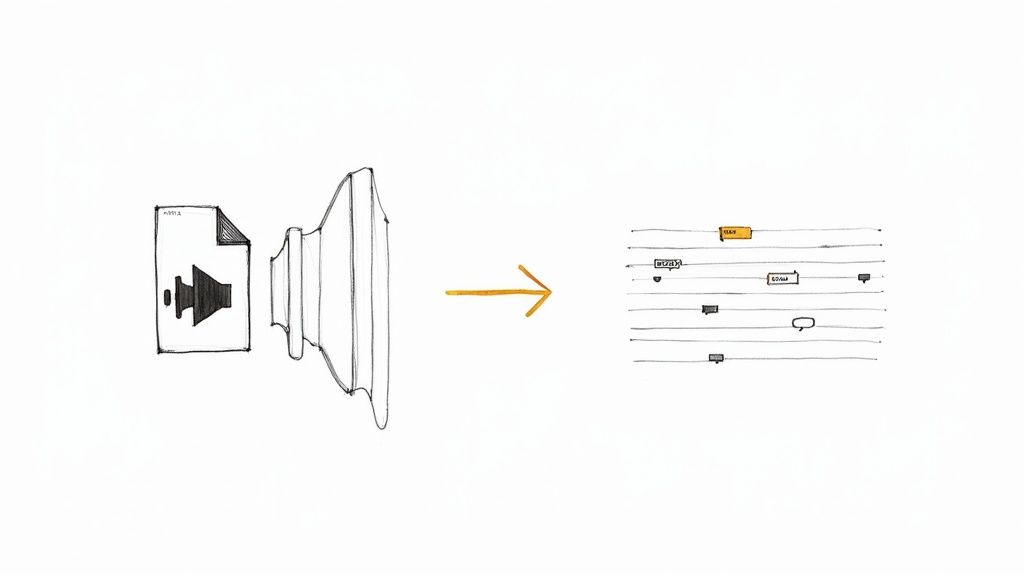

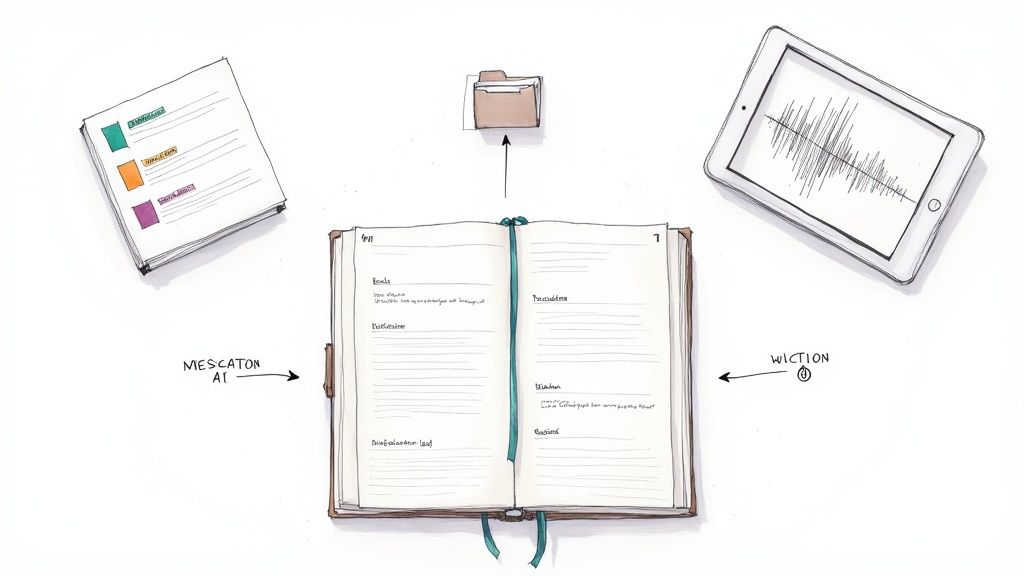

Whisper AI isn't just a generic transcription tool; its real power comes alive when you mold it to fit the specific needs of your job. Sure, uploading a file and getting a transcript is useful, but building a specialized workflow can make it an absolute game-changer. It's all about moving from a wall of text to genuine, actionable intelligence.

This is where the magic happens—turning raw audio into insights you can actually use.

This diagram shows a simple but incredibly effective process: a raw transcript gets analyzed by the AI to pull out the key points and summaries you need for your project.

Workflows for Journalists and Researchers

For a journalist staring down a deadline, every second counts. Transcribing an hour-long interview used to be a soul-crushing, multi-hour task. Now, it’s done in minutes. The trick is creating a process that gets you from raw audio to a perfect, quotable soundbite as quickly as possible.

My personal workflow for this always starts with enabling both speaker diarization and timestamps. As soon as the transcript is ready, I don't read it from start to finish. Instead, I immediately hit search (Ctrl+F or Cmd+F) for keywords on the core topic. The timestamps next to those keywords let me instantly jump to that part of the audio to verify the speaker's tone and context. This ensures every quote I pull is 100% accurate and true to the original conversation.

Researchers digging through focus group recordings can use a similar approach, but the end goal is different. You’re not just hunting for quotes; you’re trying to spot the big, overarching themes.

A powerful technique I've seen work wonders is to transcribe all your session recordings and then use follow-up questions on the entire dataset. Ask things like, "What were the most common frustrations mentioned?" or "List every instance where participants discussed pricing." This turns hours of unstructured conversation into a clean, organized thematic summary.

This method effectively transforms a pile of individual transcripts into a single, searchable knowledge base, making your qualitative analysis massively more efficient.
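If you export your transcripts as plain text, you can get a crude version of this thematic view yourself. The sketch below is a simple keyword tally, not the AI-driven follow-up questions described above: it counts how many sessions mention each term at least once. The session names and keywords are invented for illustration.

```python
from collections import Counter


def count_theme_mentions(transcripts: dict[str, str], keywords: list[str]) -> Counter:
    """Count how many sessions mention each keyword at least once."""
    counts: Counter = Counter()
    for text in transcripts.values():
        lowered = text.lower()
        for keyword in keywords:
            # Plain substring match: fast, but blind to synonyms and context.
            if keyword.lower() in lowered:
                counts[keyword] += 1
    return counts
```

A tally like this won't catch a participant who says "too expensive" when you searched for "pricing", which is exactly the gap the plain-English questions are meant to close.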

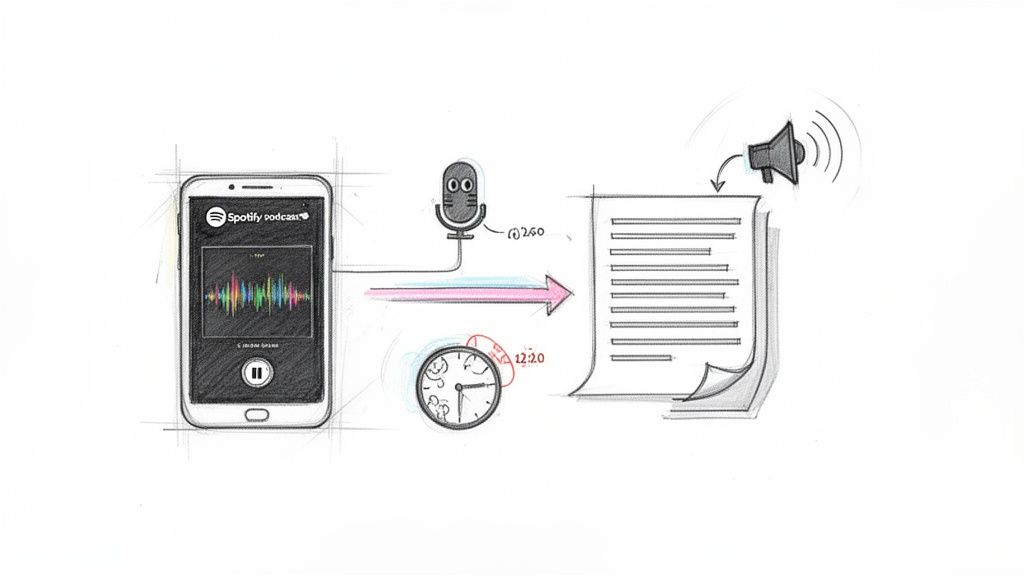

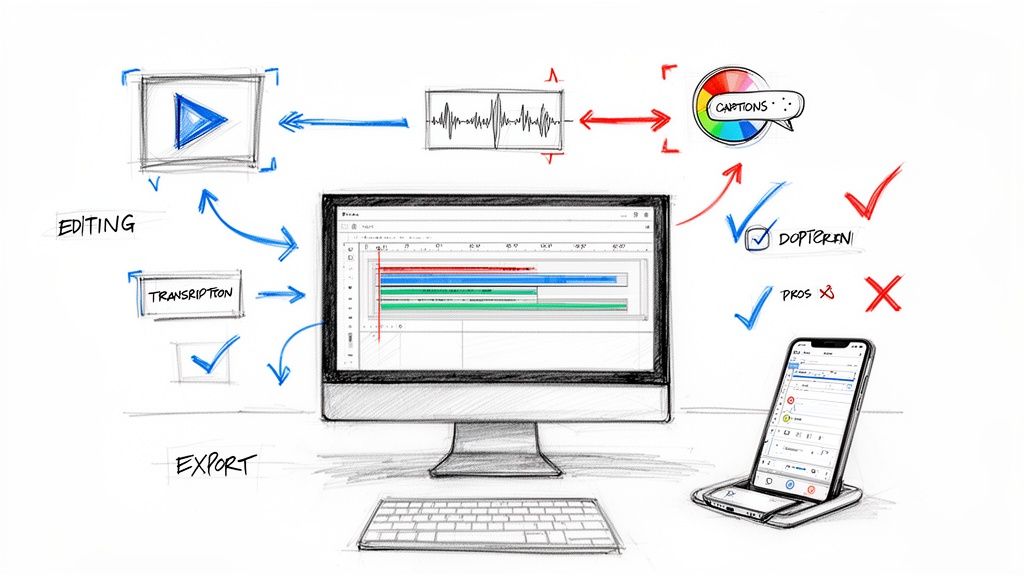

A Game-Changer for Video Editors and Podcasters

If you're a video editor or podcaster, your entire world revolves around a timeline. We’ve all been there, endlessly scrubbing through hours of footage to find that one perfect clip. It’s one of the most tedious parts of the job. When used smartly, Whisper AI can pretty much eliminate that pain.

The secret is to use the timestamped transcript as your map. Instead of just guessing and dragging the playhead in your editing software, you can simply read the transcript. When you find the exact line you need, a quick glance at the timestamp tells you precisely where it is. I’ve seen this simple habit cut footage logging time by over 50%.

And for video content, the transcript does so much more. It's your direct path to creating more accessible and engaging content.

- Subtitle Generation: Just export the transcript as an SRT or VTT file and you have time-synced subtitles ready for YouTube, Vimeo, or any social media platform.

- Content Repurposing: For creators looking to get more mileage out of their videos, these transcripts feed straight into short-form strategies like Pro YouTube Shorts Editing for Viral Growth, where captions are essential for grabbing attention.

- Show Notes and Blogs: Podcasters can take a full episode transcript and, with a few prompts, turn it into detailed show notes, a full blog post, or a week's worth of social media updates.

By building transcription right into your production process, you create a much more efficient content engine. It's about making the text do the heavy lifting for you, long after you’ve hit the stop button.

Every professional has unique challenges, and optimizing your Whisper AI workflow can make a substantial difference. Think about your biggest time-sinks and how automated transcription and analysis could solve them.

Here’s a quick breakdown of how different roles can fine-tune their approach:

Whisper AI Workflow Optimization

Ultimately, the goal is to stop thinking of Whisper AI as just a transcription service and start seeing it as an analytical partner. By tailoring its features to your daily tasks, you can save a ton of time and produce better work.

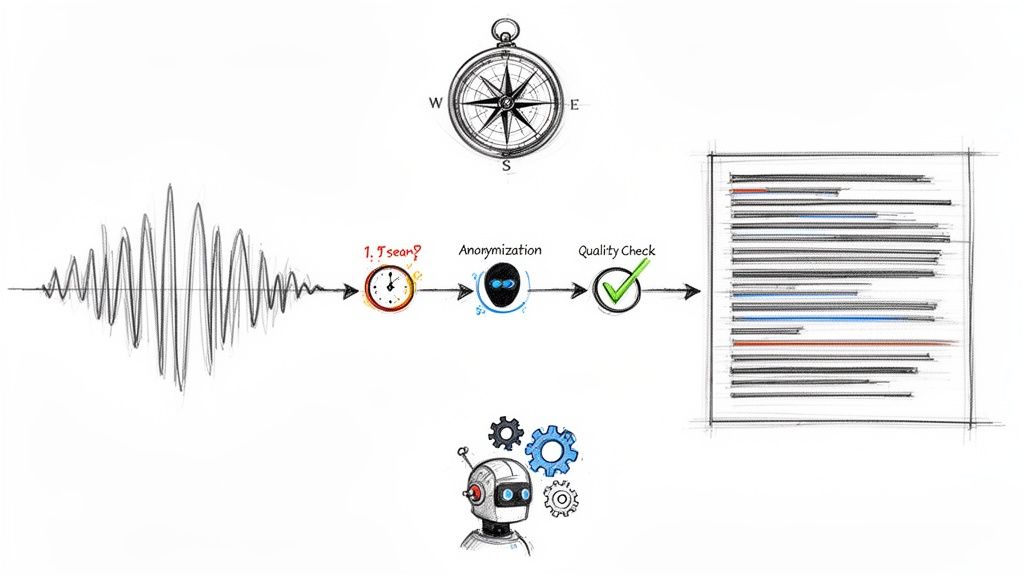

Getting Your Transcript Out and Keeping Your Data Safe

Once the AI has done its magic, you need to get that data into a format you can actually work with. This isn't just about hitting a "download" button. Choosing the right export format from the get-go is the key to a smooth workflow, saving you a ton of headaches later.

Think about what you're trying to accomplish. Are you making subtitles for a video? You'll need a file with precise timing information. Or are you just archiving an interview for your notes? In that case, a simple text file will do the trick. Whisper AI gives you a few solid options to handle these different needs.

Picking the Best Export Format for Your Project

Making the right choice here will save you from painful manual reformatting down the line. Each file type is built for a specific job, so knowing the difference is what separates a quick task from a frustrating one.

Here’s a quick rundown of the most common formats I use:

- TXT: This is your classic, no-frills text file. It's perfect when you just need the raw words without any extra data. I grab this format when I'm archiving interview notes or need to quickly paste the content into a blog post draft.

- SRT (SubRip Subtitle): The gold standard for video captions. It contains the transcribed text broken into chunks, each with a start and end timecode. You'll upload this file directly to platforms like YouTube or Vimeo to get perfectly synced captions.

- VTT (Video Text Tracks): Very similar to SRT, but it's a more modern format that gives you more control over how your captions look. Think text styling, colors, and positioning. If you need that extra bit of polish, VTT is the way to go.

For a deeper dive into how these files work with video, our guide on creating a transcription with timecode is a fantastic resource. Trust me, picking the right format first is a small step that makes a huge difference.

What About Data Privacy and Security?

Let's be real—anytime you upload files to an AI tool, especially for sensitive work, you have to think about privacy. You need to know your data is being handled responsibly. This has become even more critical now that AI is a staple in almost every business.

It’s pretty telling that over 92% of Fortune 500 companies use OpenAI APIs, the same technology that powers Whisper, for critical business functions. That kind of widespread adoption by major corporations shows a deep level of trust in the platform’s security. You can find more details on this trend in the report about AI adoption in major corporations on sqmagazine.co.uk.

Here’s the key takeaway: OpenAI’s policy for its API is crystal clear. Your data is not used to train their models unless you specifically opt in. This means your private client interviews, confidential team meetings, and internal strategy sessions stay completely private.

Whisper AI processes your files on secure servers, and your data isn't held onto longer than necessary to get your transcription done. This commitment to privacy means you can confidently use the tool for confidential projects and stay compliant with standards like GDPR, giving you the peace of mind to make it a core part of your professional toolkit.

Your Top Questions About Whisper AI Answered

Once you start using a tool like Whisper AI, a few practical questions always pop up. It's one thing to know what it can do, but it's another to understand how it handles real-world files, tricky audio, or sensitive data. Let's get into the most common questions I hear and give you some straightforward answers based on direct experience.

What's the Real Limit on File Size?

This is usually the first thing people bump into. The official OpenAI API has a technical cap of around 25 MB, but let’s be honest, that’s not very useful for a two-hour podcast or a full-day webinar recording.

That’s why platforms built on top of Whisper, like ours, are engineered differently. We can comfortably handle audio and video files that are several hours long. We’ve seen it all, and the system is built for it.

That said, here’s a pro tip for massive files: a little optimization goes a long way. If you have a three-hour recording, try compressing it into a variable bitrate MP3 first. You won't notice a difference in sound quality, but you’ll definitely notice how much faster the upload and processing go. Alternatively, splitting a truly massive file into one-hour chunks is a great fallback if an upload seems to be dragging its feet.
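If you do end up splitting a file, it helps to work out the cut points before opening your audio editor. This sketch plans one-hour chunks for a recording of a given length and also checks a file size against the API cap mentioned above. Treating that cap as exactly 25 MiB is an assumption, and the function names are illustrative.

```python
import math

# The roughly 25 MB API cap mentioned above, taken here as 25 MiB (assumption).
API_LIMIT_BYTES = 25 * 1024 * 1024


def exceeds_api_limit(size_bytes: int) -> bool:
    """Return True if a file is larger than the assumed API upload cap."""
    return size_bytes > API_LIMIT_BYTES


def plan_chunks(duration_seconds: float,
                chunk_seconds: float = 3600.0) -> list[tuple[float, float]]:
    """Return (start, end) offsets in seconds for each chunk of the recording."""
    if duration_seconds <= 0 or chunk_seconds <= 0:
        raise ValueError("duration and chunk length must be positive")
    count = math.ceil(duration_seconds / chunk_seconds)
    return [
        (i * chunk_seconds, min((i + 1) * chunk_seconds, duration_seconds))
        for i in range(count)
    ]
```

A two-and-a-half-hour recording (9,000 seconds) comes back as three ranges, with the last one covering only the final 30 minutes. Where you can, nudge each cut to a natural pause so no sentence gets split across two files.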

How Do I Get the Most Accurate Transcript Possible?

Whisper is incredibly good right out of the box, but the old rule of "garbage in, garbage out" still holds true. From my experience, the single biggest factor for a perfect transcript is the quality of your audio.

If you’re working with a recording from a noisy coffee shop or a windy outdoor interview, do yourself a favor and run it through an audio editor first. Nearly all of them have a one-click noise reduction feature that can clean things up immensely. That one minute of prep can save you so much time on edits later.

Here are a few other things I always recommend:

- Tell it the language. Whisper's auto-detect is good, but it's not psychic. Manually selecting the language gives the AI a head start, especially if there are heavy accents or niche terminology involved.

- Mind the mic. If you’re in control of the recording, get the microphone as close to the speaker as possible. Nothing beats clean, direct audio.

- Avoid crosstalk. In group settings, encourage people to speak one at a time. When speakers overlap, it confuses any transcriber, human or AI.

"Modern AI-powered tools like Whisper... use large neural networks trained on hundreds of thousands of hours of diverse audio and text. They don’t need training... They just work, straight out of the box, for a wide range of accents, languages, and speaking styles."

This quote from a seasoned dictation user really captures the magic, but a little bit of audio best practice will always push the results from great to perfect.

Can It Really Handle Multiple Languages in One File?

Yes, and it’s one of the most impressive things about it. Whisper was built from the ground up to be multilingual. It can identify and transcribe different languages as they appear in the same audio file, switching between them on the fly.

This is a game-changer for so many situations:

- Global Team Meetings: A call with team members switching between English and Spanish? No problem.

- Documentaries and Media: Need subtitles for a film with interviews in three different languages? Done.

- Language Practice: Record a conversation with a language exchange partner and get a clean transcript of both languages to review.

You don't have to toggle any special settings. Just upload the file, and the AI figures it out, neatly transcribing each language as it's spoken.

Is My Data Used to Train OpenAI's Models?

This is the big one, especially for anyone dealing with client information or internal strategy. The answer completely depends on how you access Whisper.

When using the official OpenAI API, their policy is crystal clear: your data is not used for training unless you explicitly opt in. This creates a secure environment for businesses that need to maintain confidentiality. Your private meetings stay private.

However, if you're using a third-party app, you need to check their specific privacy policy. Here at Whisper AI, we treat your data as your own. Files are processed securely, and we never use them for anything other than generating your transcript and summary. We don’t store your data long-term or use it for model training, so you can work with complete peace of mind and stay compliant with standards like GDPR.

Ready to see for yourself? Whisper AI turns your audio and video into accurate, organized text in just a few clicks. Upload a file or paste a link, and let it do the heavy lifting. Experience the power of effortless transcription today.