Whisper AI: A Practical Guide to OpenAI's Speech Tech

Ever felt the frustration of trying to get accurate text from an audio file? You're not alone. For years, we've dealt with clunky transcription software that stumbles over accents, background noise, and anything more complex than a crystal-clear monologue.

That's where Whisper AI changes the game. It’s a speech recognition system from OpenAI that feels less like a tool and more like a human assistant, one who can listen to your messy, real-world audio and turn it into clean, accurate text.

What Is Whisper AI?

At its core, Whisper AI is a sophisticated automatic speech recognition (ASR) system. But that technical term doesn't quite do it justice. Forget the old dictation software that forced you to speak like a robot or spend hours "training" it to your voice. Whisper is different.

It comes ready to go, right out of the box, because it was trained on a staggering 680,000 hours of diverse audio gathered from across the internet, each clip paired with a transcript. This massive training gives it an intuitive grasp of how we actually talk, with all our slang, accents, and interruptions.

A New Standard in Speech Recognition

Whisper's purpose is straightforward: turn speech into text. What makes it stand out is just how well it does the job, especially when conditions are less than perfect. It was built to be tough and adaptable from the ground up, handling audio that would trip up most other systems.

So, what makes it so good?

- Incredible Accuracy: It doesn't just get the easy words right. Whisper often copes well with technical jargon, unusual names, and the subtle nuances of conversation.

- Noise Resistance: It has an uncanny ability to tune out noise, whether that's a clattering coffee shop, background music, or street sounds, and lock onto the spoken words.

- Global Language Fluency: Thanks to its diverse training, it handles a wide range of global accents and regional dialects, although accuracy varies by language and is noticeably weaker for languages that were scarce in its training data.

When OpenAI released Whisper in September 2022, it represented a genuine leap forward for ASR technology. It quickly became a cornerstone of OpenAI's toolkit, and OpenAI also open-sourced the code and trained model weights, in several sizes, under the MIT license for developers to build on. If you look at the history of OpenAI's major releases, you'll see just how significant Whisper's arrival was.

How Does Whisper AI Learn to Understand Speech?

So, how does Whisper pull off its incredible accuracy? It's not about stuffing the AI with a bunch of grammar rules from a textbook. The real secret is that it learns a lot like we do: through immersion.

Imagine trying to learn a new language by listening to thousands of hours of real conversations, with subtitles, instead of just reading a textbook. That's essentially what OpenAI did. They trained Whisper on those 680,000 hours of web audio, each clip paired with its transcript, so the model learned by example which sounds map to which words. This massive dataset taught it to recognize different accents, filter out background noise, and follow the natural rhythm of human speech (ums, ahs, and all). It’s a much more robust approach than older systems, which often stumbled over the messiness of real-world audio.

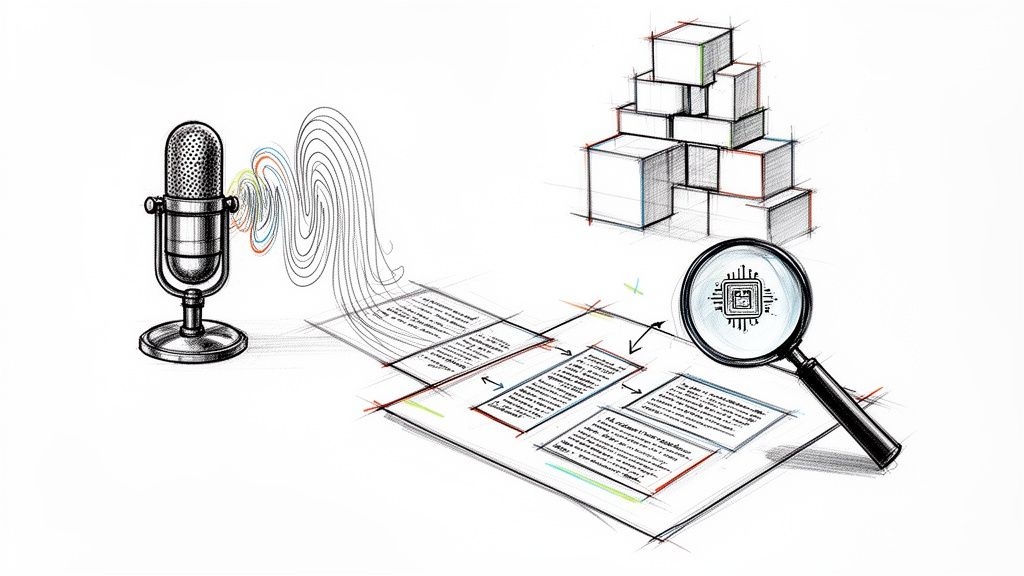

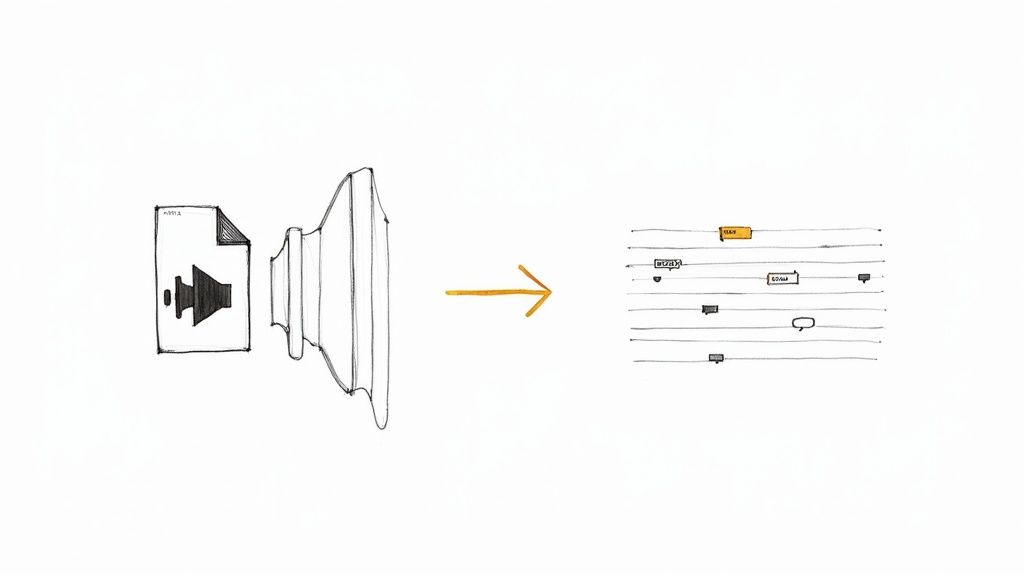

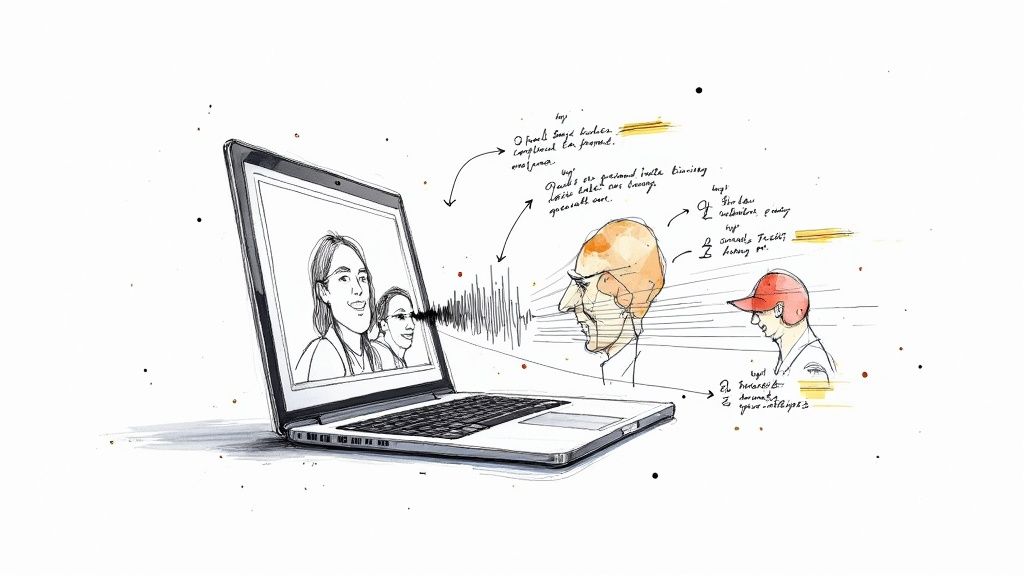

The Transformer Model: A Listener and a Writer

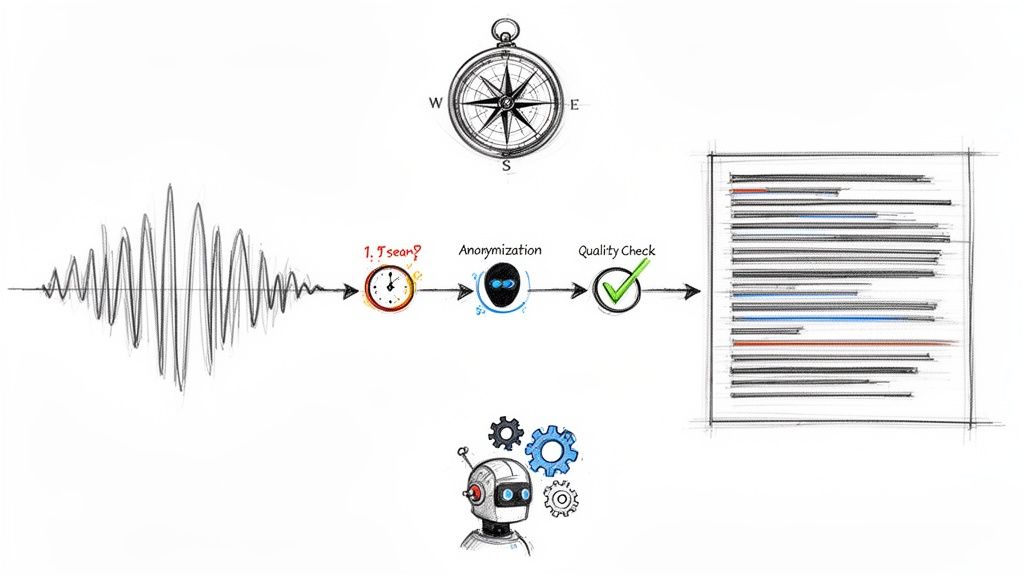

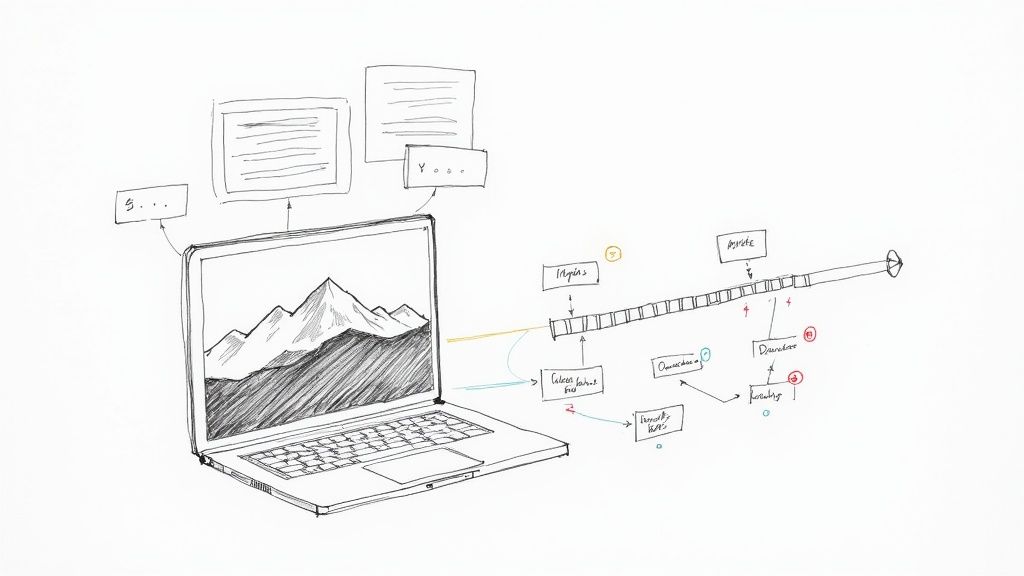

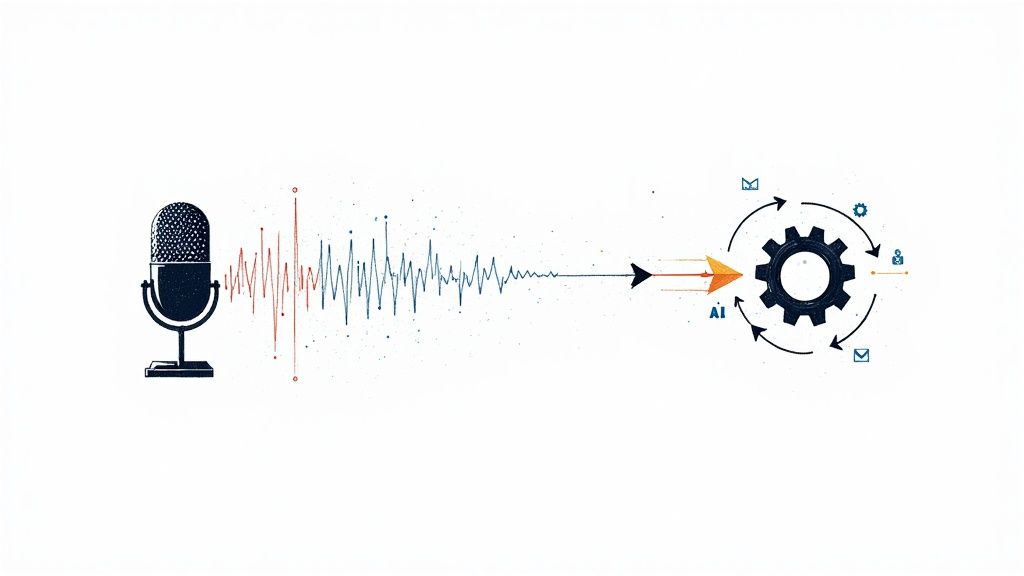

Under the hood, Whisper AI runs on a powerful neural network architecture called a Transformer. You can think of it as a highly sophisticated two-part system that works in perfect sync to turn spoken words into accurate text. It’s like having two specialists working together: one who listens intently and another who writes down what they hear.

This process is broken down into two key components:

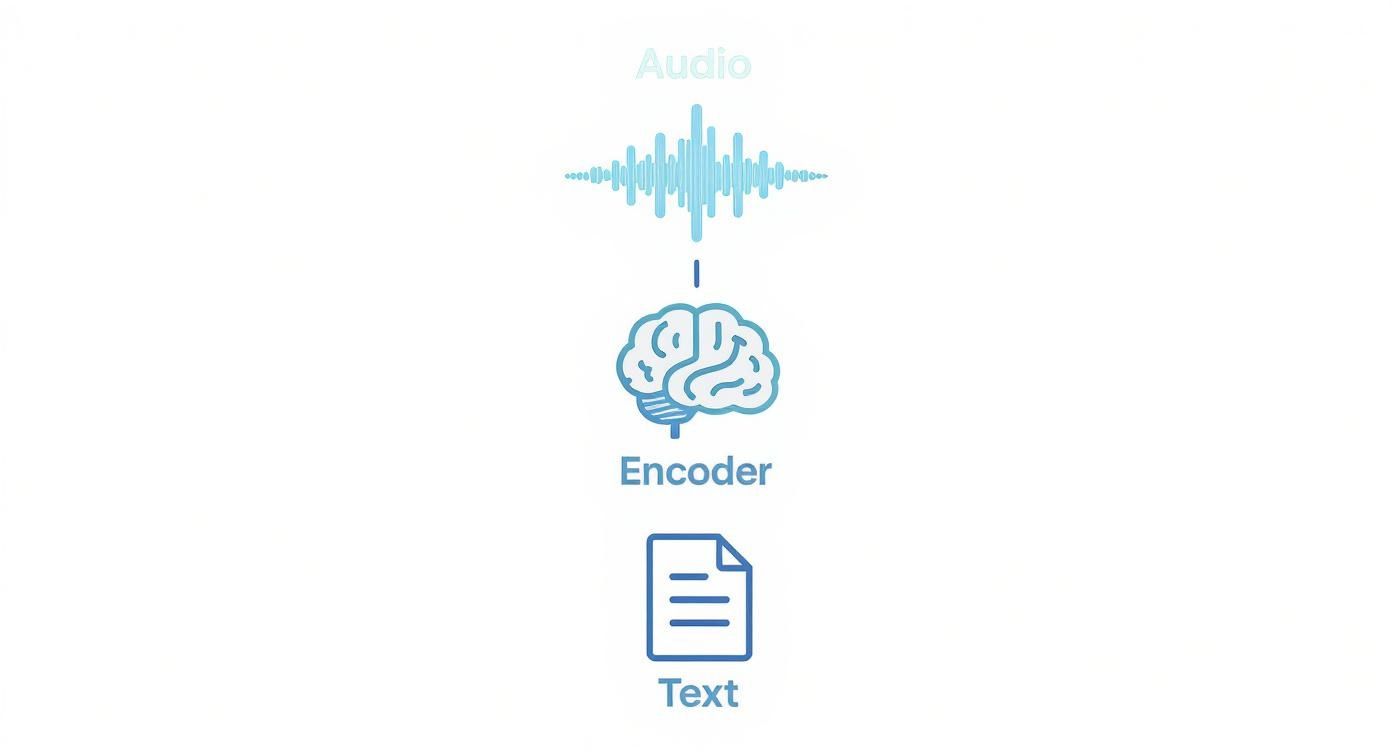

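- The Encoder: This is the "listener." Whisper first splits the audio into 30-second windows and converts each one into a log-Mel spectrogram, a picture of how the sound's energy is spread across frequencies over time. The encoder analyzes that spectrogram and turns it into a rich internal representation of what was said, including its context and nuances. It's not just hearing noise; it's building a detailed picture of the speech.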

- The Decoder: This is the "writer." It receives the contextual information from the encoder and produces the transcript one token (a word or piece of a word) at a time, each time predicting the most likely next token given the audio and the text it has written so far.

This encoder-decoder teamwork is what allows Whisper to add punctuation, make sense of ambiguous phrases, and produce text that reads like a human wrote it. Because the decoder can draw on an entire 30-second window of audio, plus the text it has already written, it has far more context to work with than older systems that matched sounds to words in a more piecemeal way.
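To make the "listener" step more concrete: in the open-source `openai-whisper` package, audio is resampled to 16 kHz and handled in 30-second windows before each window is turned into a spectrogram for the encoder. The sketch below reproduces only that windowing arithmetic in plain Python. The constants mirror the values used in the package's audio preprocessing; the helper functions are hypothetical simplifications, not the library's code.

```python
from typing import List

# Constants mirroring the openai-whisper package's audio preprocessing
SAMPLE_RATE = 16_000                      # audio is resampled to 16 kHz mono
CHUNK_LENGTH = 30                         # the encoder sees 30-second windows
HOP_LENGTH = 160                          # one spectrogram frame every 10 ms
N_SAMPLES = SAMPLE_RATE * CHUNK_LENGTH    # 480,000 samples per window
N_FRAMES = N_SAMPLES // HOP_LENGTH        # 3,000 spectrogram frames per window


def pad_or_trim(samples: List[float], length: int = N_SAMPLES) -> List[float]:
    """Zero-pad or truncate so every window has exactly `length` samples."""
    if len(samples) >= length:
        return samples[:length]
    return samples + [0.0] * (length - len(samples))


def split_into_windows(samples: List[float]) -> List[List[float]]:
    """Cut a recording into consecutive 30-second windows (simplified, hypothetical helper)."""
    return [
        pad_or_trim(samples[start:start + N_SAMPLES])
        for start in range(0, max(len(samples), 1), N_SAMPLES)
    ]


if __name__ == "__main__":
    seventy_seconds = [0.0] * (70 * SAMPLE_RATE)
    windows = split_into_windows(seventy_seconds)
    print(len(windows), len(windows[-1]), N_FRAMES)  # 3 480000 3000
```

A 70-second clip therefore becomes three windows, the last one padded with silence. The real `transcribe()` function moves its window according to the timestamps the model predicts rather than cutting at fixed boundaries, so treat this as an illustration of the idea, not the library's exact behavior.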

A Smarter Way for AI to Learn

This approach marks a huge step forward for AI. Instead of following a rigid set of hand-written rules, Whisper learned its behavior directly from the examples in its training data. That’s why it can handle different accents or a noisy café in the background without being specifically trained for each scenario. You can find more details about this kind of adaptive AI on Larksuite.com.

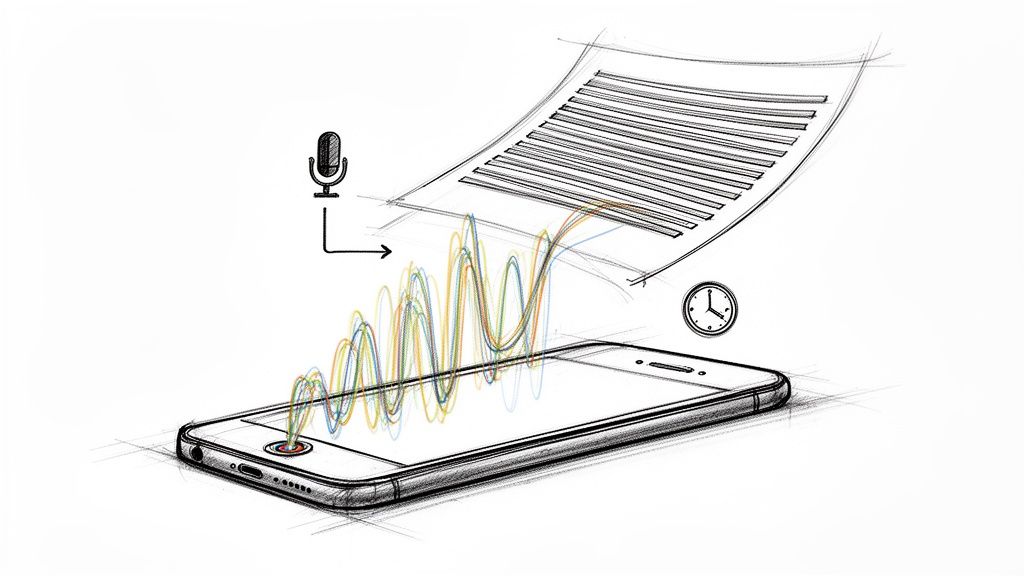

This powerful ability to turn chaotic audio into clean, usable text is a genuine game-changer. For anyone looking to streamline their workflow, knowing how to put this technology to work is a massive advantage. To get started, check out our complete guide on how to convert audio to text and see how you can apply it yourself.

What Can Whisper AI Actually Do?

Whisper AI isn't just another speech-to-text program. It’s what happens when you train a model on a massive, diverse slice of the internet. The result is a tool that sets a new standard for what we can expect from automated transcription, built on three powerful pillars: exceptional accuracy, broad multilingual support, and a surprising tolerance for messy audio.

This combination is key. It allows Whisper to step out of the pristine, studio-recorded environment and function effectively in the real world—where meetings have background chatter, interviews are done over spotty connections, and audio is anything but perfect. It’s a significant leap from older software that would fall apart under these conditions.

Exceptional Accuracy and Contextual Understanding

The first thing that strikes you when using Whisper AI is how often it gets things right. On clear speech in well-represented languages such as English, it typically produces transcripts with a low word error rate, and it often holds up even with specialized or technical jargon. That's because it isn't just matching sounds to a dictionary; its decoder predicts each word in light of the surrounding conversation.

Think about a medical discussion. An older tool might get confused between similar-sounding words like "hypotension" and "hypertension." Whisper, however, can often figure out the correct term based on the other words and concepts in the sentence. This is the direct result of being trained on such a huge dataset—it behaves less like a stenographer and more like an attentive listener.

From my experience, Whisper's magic lies in its ability to navigate ambiguity. It isn't just transcribing words; it's interpreting audio using a deep, probabilistic grasp of how language actually works.
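If you already know which specialist terms or names are likely to come up, the open-source `openai-whisper` package lets you hand the decoder that context up front through the `initial_prompt` argument of `transcribe()`. The sketch below builds such a prompt from a glossary. The `Glossary:` phrasing, the helper names, and the default model size are illustrative assumptions, and a prompt improves the odds of the right spelling rather than guaranteeing it.

```python
from typing import Iterable


def build_initial_prompt(terms: Iterable[str]) -> str:
    """Turn expected terms into a short context prompt (the phrasing is an assumption)."""
    cleaned = [t.strip() for t in terms if t.strip()]
    return ("Glossary: " + ", ".join(cleaned) + ".") if cleaned else ""


def transcribe_with_glossary(path: str, terms: Iterable[str], model_name: str = "small") -> str:
    """Transcribe a file with openai-whisper, priming the decoder with a glossary."""
    import whisper  # requires `pip install -U openai-whisper` and ffmpeg on the PATH

    model = whisper.load_model(model_name)
    prompt = build_initial_prompt(terms)
    # An empty glossary means no prompt at all, which is transcribe()'s default
    result = model.transcribe(path, initial_prompt=prompt or None)
    return result["text"]
```

For example, `transcribe_with_glossary("consult.mp3", ["hypotension", "metoprolol"])`, where the file name is a placeholder.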

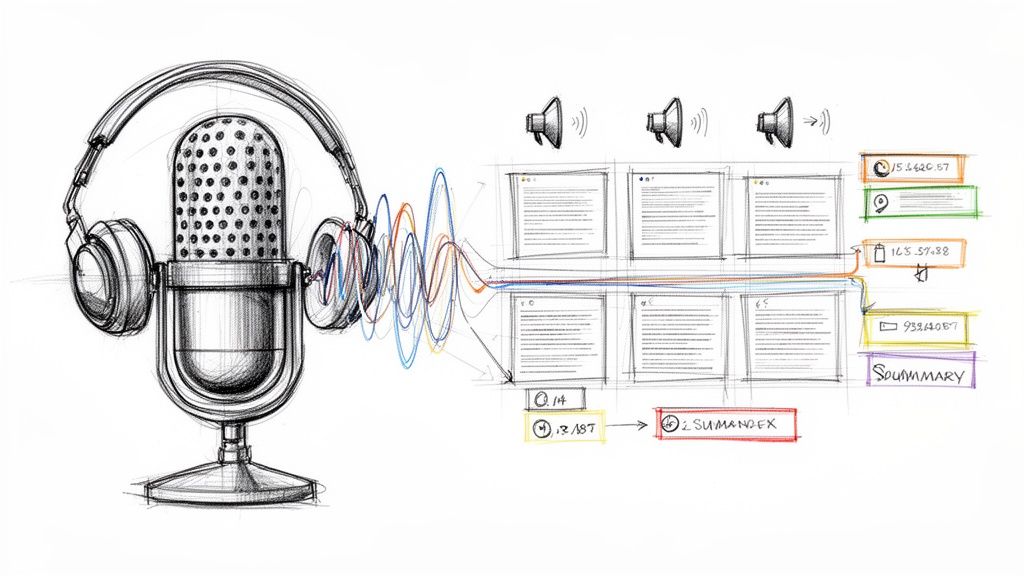

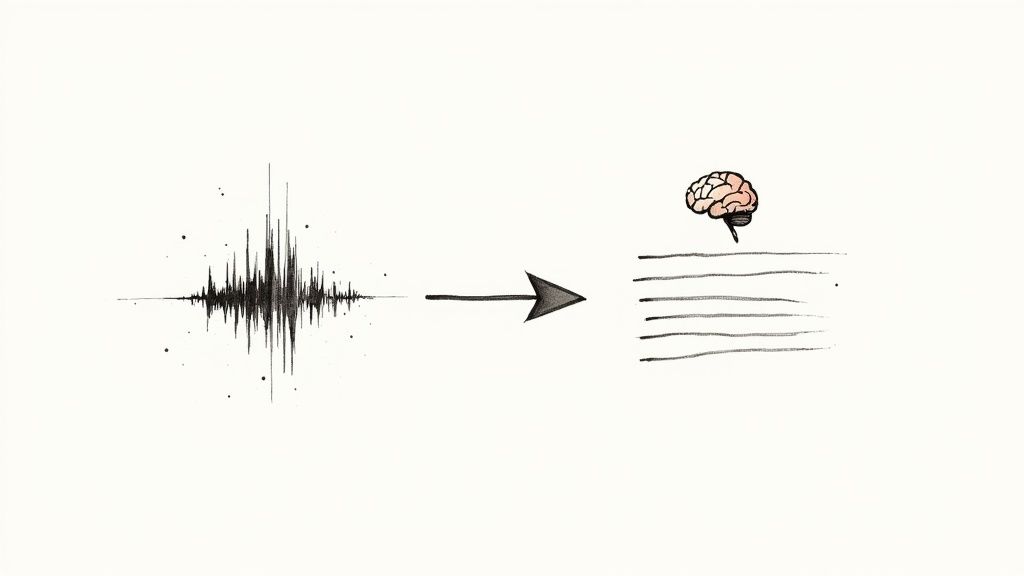

The process behind this is, at a high level, quite elegant. It takes raw audio and uses its sophisticated architecture to turn it into clean, structured text.

This workflow—moving from the sound wave to an encoder that processes it, and then to a decoder that writes it out—is the powerhouse driving its incredible precision.

Transcribe and Translate in Nearly 100 Languages

Because Whisper AI was trained on audio from all over the world, it handles multiple languages with almost no setup. Right out of the box, it can transcribe speech in nearly 100 languages and translate speech from those languages into English.

This is a game-changer for so many people:

- Journalists can conduct an interview in Spanish and get an accurate English transcript without missing a beat.

- YouTube creators can generate subtitles in the language spoken in their videos, or English subtitles for videos recorded in French, German, or Japanese, to connect with a global audience.

- Global teams can transcribe their international conference calls, making sure everyone is on the same page regardless of their native tongue.

You don't need to fiddle with language packs or complicated settings: Whisper detects the spoken language automatically, though you can also specify it yourself. It just works.
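In the open-source `openai-whisper` package, these choices map to two arguments of `transcribe()`: `task` (either "transcribe" or "translate") and an optional `language` code. Leave `language` out and the model detects it from the first 30 seconds of audio. Keep in mind that translation only goes into English. A minimal sketch, where the helper names and the "small" model size are assumptions:

```python
from typing import Dict, Optional


def whisper_options(language: Optional[str] = None, want_english: bool = False) -> Dict[str, str]:
    """Build keyword arguments for whisper's transcribe().

    Whisper can only translate *into* English, so "translate" is chosen
    only when English output is wanted from non-English speech.
    """
    options: Dict[str, str] = {"task": "transcribe"}
    if language is not None:
        options["language"] = language  # e.g. "es"; omit to let the model auto-detect
    if want_english and language != "en":
        options["task"] = "translate"
    return options


def transcribe_file(path: str, **options: str) -> str:
    """Run the open-source model with the chosen options (requires openai-whisper)."""
    import whisper

    model = whisper.load_model("small")
    return model.transcribe(path, **options)["text"]
```

A journalist with a Spanish interview could call `transcribe_file("interview.mp3", **whisper_options("es", want_english=True))` to get English text; the file name is a placeholder.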

Handles Background Noise Like a Pro

Let's be honest: most audio isn't recorded in a soundproof booth. Real-world recordings are full of challenges like:

- The hum of a coffee shop or passing traffic

- People talking over each other

- Echoes from a poorly-mic'd conference room

This is where most transcription tools stumble, spitting out gibberish. Whisper AI, on the other hand, is remarkably good at tuning out the chaos and locking onto the human voice. I've personally used it to pull a clear conversation out of a noisy café, and it accurately captured a lecture recorded from the back of a huge auditorium. This resilience makes it a seriously practical tool for anyone who can’t control their recording environment.

Real-World Applications of Whisper AI

Any new technology is only as good as what it can actually do for people. While the inner workings of Whisper AI are fascinating, its real magic is in the practical problems it solves every day. From hectic newsrooms to quiet clinics, its knack for turning spoken words into accurate text is fundamentally changing how people get their work done.

What was once a tedious, time-sucking chore—transcription—is now becoming an automated background task. Those saved hours are immediately put back into more important work, whether that's polishing a video edit, analyzing legal briefs, or simply giving a patient more focused attention.

Empowering Journalists and Content Creators

If you've ever been a journalist on assignment, you know that getting a great interview is only half the job. You’re often recording in less-than-ideal conditions—a busy street, a windy park—which makes transcribing that audio later a real headache. Whisper AI cuts through that noise, delivering a clean, accurate transcript in minutes instead of hours.

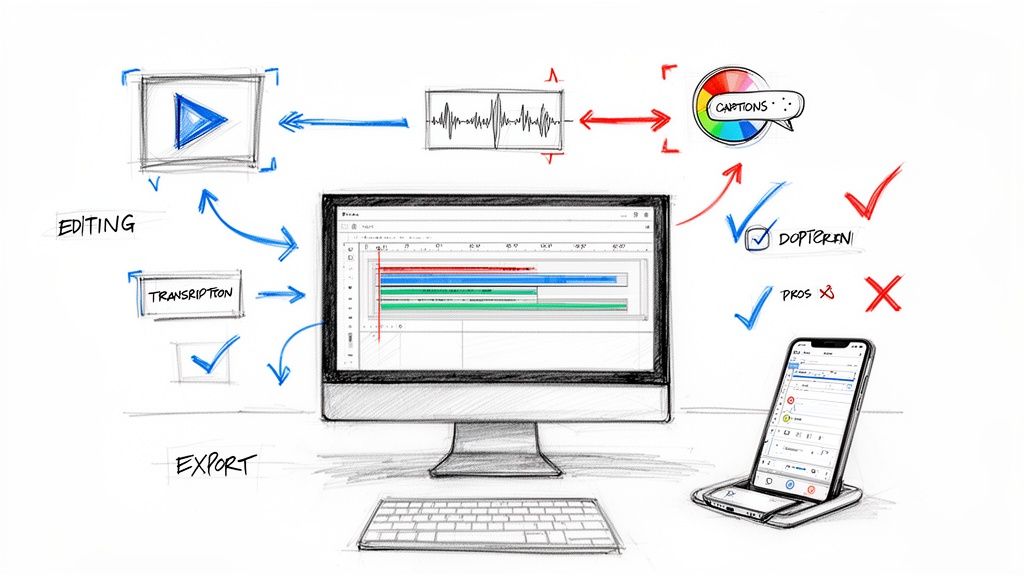

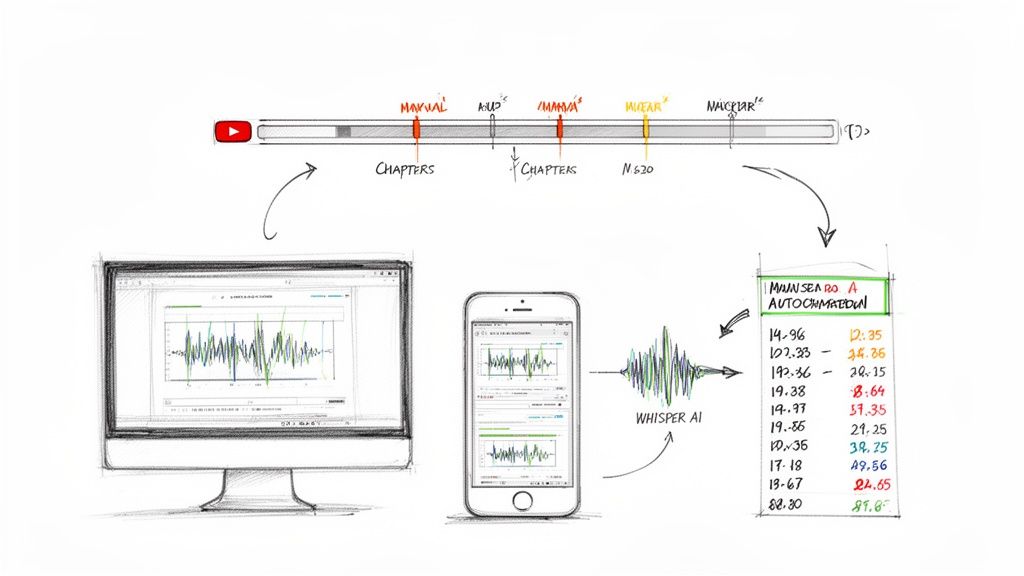

Content creators, especially YouTubers, are in a similar boat. Good captions are non-negotiable for accessibility and keeping viewers engaged, but doing them by hand is a slow, painful process.

- Boost Accessibility: With automatic captions, content becomes instantly accessible to hearing-impaired viewers.

- Increase Reach: Generate subtitles in a video's original language, or translate non-English speech into English subtitles, to open videos up to a wider audience.

- Improve SEO: Search engines can index the text in your captions, which can make your videos easier to find.
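If you want to go from transcript to caption file yourself, the open-source `transcribe()` returns a `segments` list in which each entry carries `start` and `end` times in seconds plus its `text`. The sketch below turns that list into the SubRip (`.srt`) format that YouTube and most video players accept; the function names are hypothetical. The package's command-line tool can also write `.srt` files directly through its `--output_format` option.

```python
from typing import Dict, List, Union

Segment = Dict[str, Union[float, str]]


def srt_timestamp(seconds: float) -> str:
    """Format a time in seconds as an SRT timestamp: HH:MM:SS,mmm."""
    total_ms = int(round(seconds * 1000))
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def segments_to_srt(segments: List[Segment]) -> str:
    """Render Whisper-style segments as numbered SRT cues separated by blank lines."""
    cues = []
    for index, seg in enumerate(segments, start=1):
        start = srt_timestamp(float(seg["start"]))
        end = srt_timestamp(float(seg["end"]))
        text = str(seg["text"]).strip()
        cues.append(f"{index}\n{start} --> {end}\n{text}\n")
    return "\n".join(cues)


if __name__ == "__main__":
    demo = [  # made-up segments for illustration
        {"start": 0.0, "end": 2.5, "text": " Welcome back to the channel."},
        {"start": 2.5, "end": 61.04, "text": " Today we're testing microphones."},
    ]
    print(segments_to_srt(demo))
```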

Whisper AI is a powerhouse for audio, but it's worth exploring other essential AI tools for content creators to round out your production workflow.

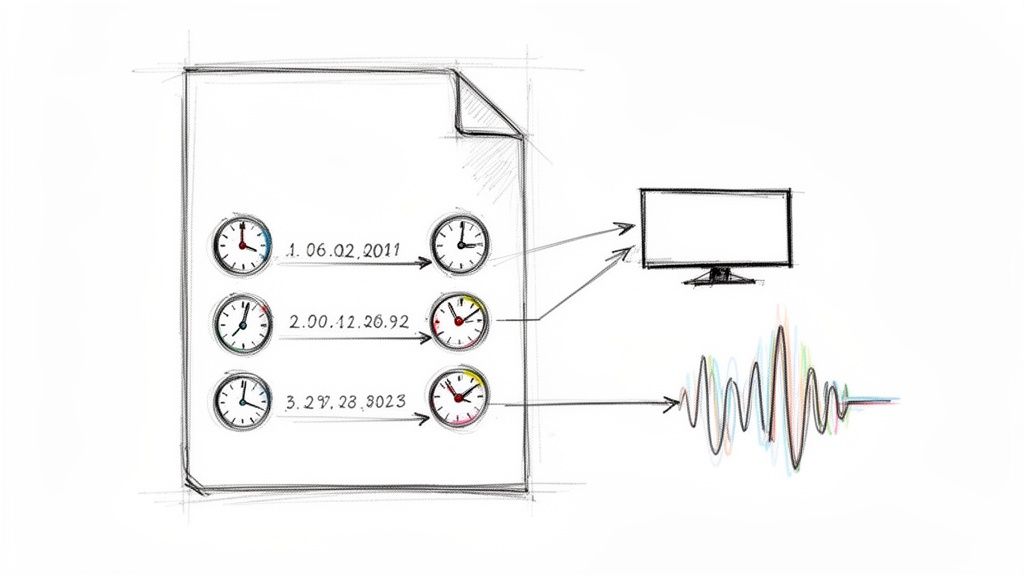

Transforming Healthcare and Legal Workflows

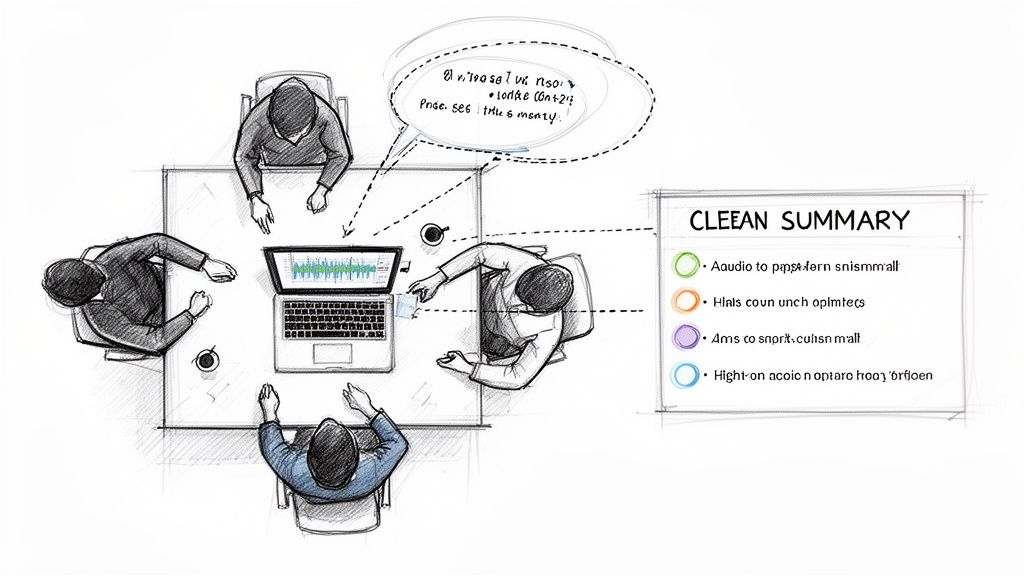

In high-stakes professions like medicine and law, every word matters. Precision is everything, and Whisper AI is making a huge difference by improving how critical records are created and managed.

The ability to reliably convert complex spoken dialogue into searchable text is not just a convenience; it's a fundamental improvement to information management in critical sectors.

In a clinical setting, doctors can have patient consultations transcribed automatically, which lets them focus completely on the patient instead of being glued to a keyboard and can lead to better notes and better care. Similarly, in the legal field, court proceedings and depositions can be transcribed soon after each session, creating a searchable digital record that dramatically speeds up case review and research. In both fields, a person should still review the transcript, given the occasional hallucinations described under the limitations below.
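Because Whisper's output segments keep their timestamps, a transcript doubles as an index into the recording. Here is a minimal sketch of a case-insensitive keyword search, assuming the same `start` and `text` fields the open-source package returns; the function name and sample data are hypothetical.

```python
from typing import Dict, List, Tuple, Union

Segment = Dict[str, Union[float, str]]


def find_mentions(segments: List[Segment], term: str) -> List[Tuple[float, str]]:
    """Return (start_time, text) for every segment that mentions `term`, ignoring case."""
    needle = term.casefold()
    return [
        (float(seg["start"]), str(seg["text"]).strip())
        for seg in segments
        if needle in str(seg["text"]).casefold()
    ]


if __name__ == "__main__":
    deposition = [  # made-up segments for illustration
        {"start": 12.0, "end": 15.2, "text": " I signed the contract in March."},
        {"start": 15.2, "end": 19.8, "text": " The Contract was never countersigned."},
        {"start": 19.8, "end": 22.0, "text": " No further questions."},
    ]
    for start, text in find_mentions(deposition, "contract"):
        print(f"{start:7.1f}s  {text}")
```

Each hit points straight to the moment in the audio, so a reviewer can jump there and listen to the original.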

The engine driving this is a sophisticated speech-to-text AI system. If you want to dive deeper into how this technology works, you can explore our detailed guide on speech-to-text AI. Understanding the basics really helps you appreciate how powerful it is in these specialized fields.

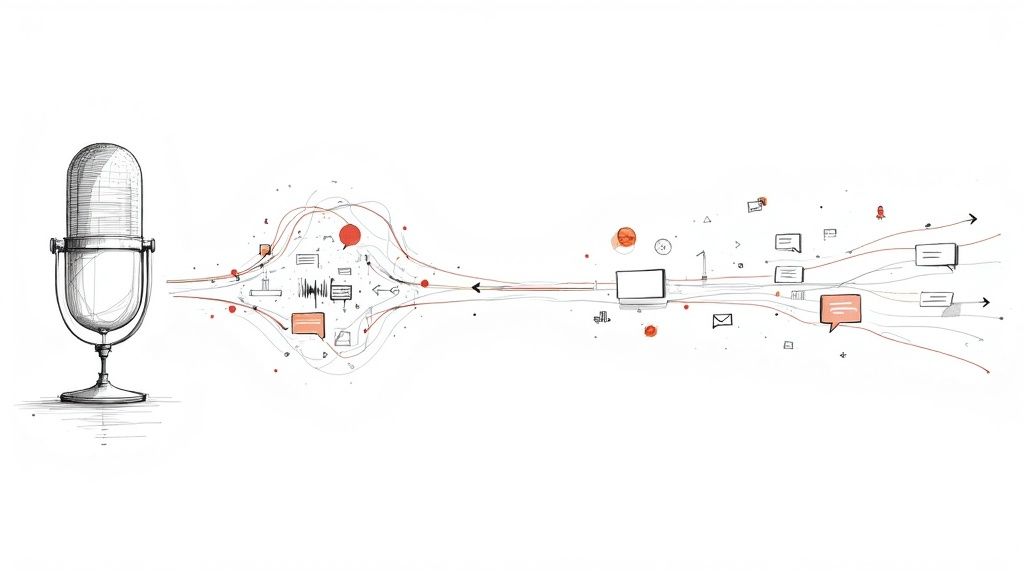

Building the Next Generation of Apps

Finally, developers are grabbing Whisper's open-source model and running with it, building all sorts of creative, voice-enabled applications. Think hands-free controls for productivity apps or interactive voice assistants for learning software.

Because Whisper is so powerful and accessible, it gives developers a solid foundation to build features that, just a few years ago, would have been far too complex or expensive to even consider.

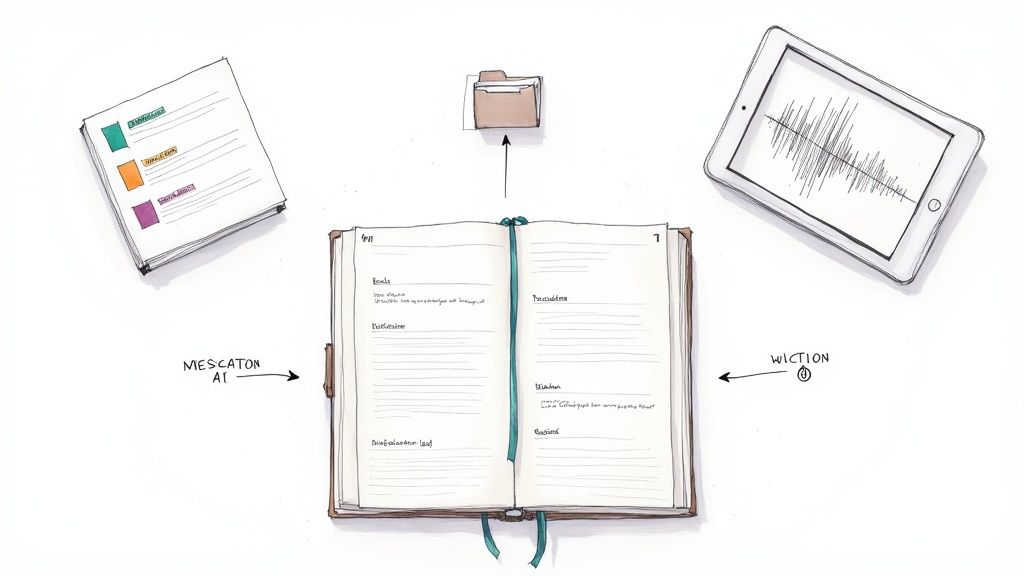

How to Start Using Whisper AI

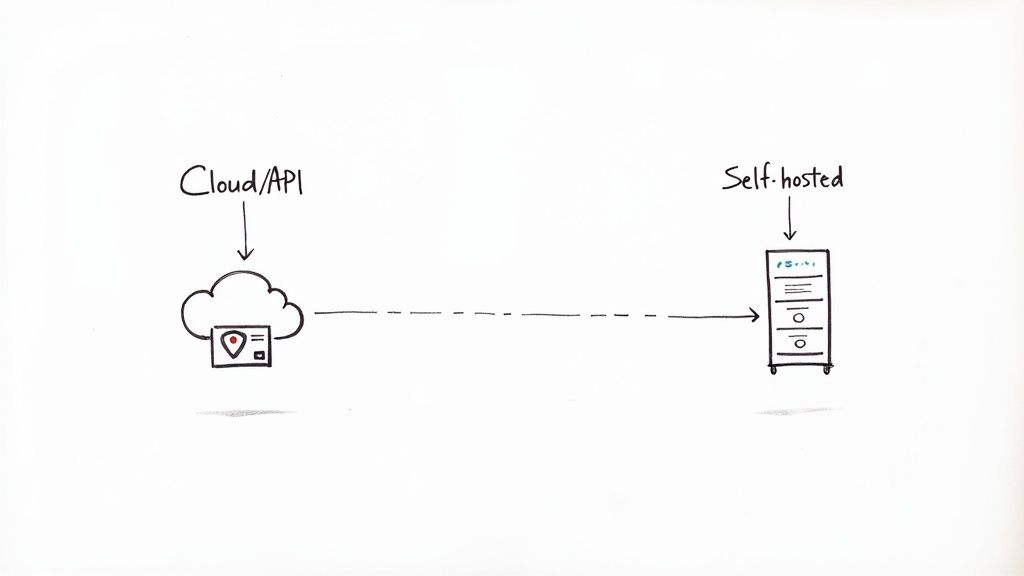

Getting your hands dirty with Whisper AI is probably easier than you think. You’ve really got two main ways to go about it. The path you choose will boil down to your technical skills, what you're willing to spend, and how tightly you need to control your data.

Each route has its own set of perks, serving everyone from a casual user who just needs a quick transcript to a developer building a complex application.

Using the OpenAI API

The most straightforward way in is through the official OpenAI API. This is the perfect "plug-and-play" option if you want a simple, pay-as-you-go service without messing with servers or installations. It’s built for convenience, letting you slot powerful transcription into your apps or workflows with just a bit of code.

To get started, you just need an OpenAI account and an API key. Once you have those, you can start sending audio files over for transcription. It’s fast, reliable, and since you only pay for what you use, it scales beautifully.

This approach is a great fit for:

- Small Businesses: Adding transcription to customer support software.

- Content Creators: Generating subtitles for videos in a snap.

- Developers: Quickly building and testing new voice features in an app.

The API handles all the heavy lifting behind the scenes, so you can just focus on the final product without needing your own beefy hardware.
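For developers, the call itself is short. The sketch below uses OpenAI's official Python SDK (the 1.x `openai` package) with the hosted `whisper-1` model and assumes your key is set in the `OPENAI_API_KEY` environment variable. OpenAI documents a 25 MB upload limit and a list of accepted formats (including MP3, MP4, M4A, WAV, and WebM), so the sketch checks both before uploading. The helper names are hypothetical, and the limits are worth re-checking against OpenAI's current API reference.

```python
import os
from pathlib import Path

MAX_UPLOAD_BYTES = 25_000_000  # conservative reading of the documented 25 MB limit
SUPPORTED_SUFFIXES = {".flac", ".mp3", ".mp4", ".mpeg", ".mpga", ".m4a", ".ogg", ".wav", ".webm"}


def upload_problem(filename: str, size_bytes: int) -> str:
    """Return why a file can't be sent as-is, or an empty string if it looks fine."""
    suffix = Path(filename).suffix.lower()
    if suffix not in SUPPORTED_SUFFIXES:
        return f"unsupported format: {suffix or '(none)'}"
    if size_bytes > MAX_UPLOAD_BYTES:
        return "file exceeds 25 MB; split or compress it first"
    return ""


def transcribe_via_api(path: str) -> str:
    """Send one file to the hosted whisper-1 model and return the transcript text."""
    from openai import OpenAI  # pip install openai

    problem = upload_problem(path, os.path.getsize(path))
    if problem:
        raise ValueError(problem)
    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    with open(path, "rb") as audio_file:
        transcript = client.audio.transcriptions.create(model="whisper-1", file=audio_file)
    return transcript.text
```

Usage: `print(transcribe_via_api("meeting.m4a"))`, where the file name is a placeholder.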

Running Whisper Locally on Your Own Machine

The second path is for the tinkerers and those who prioritize privacy and control above all else: running the open-source Whisper AI models directly on your own computer. This is a favorite for developers, researchers, and anyone who wants to ensure their audio files never leave their possession.

When you host it yourself, you're in complete command. There are no per-minute fees, which can add up to huge savings if you’re transcribing a lot of audio. Of course, this route has some technical hurdles.

Self-hosting Whisper puts you in the driver's seat. It offers unparalleled privacy and cost-efficiency for large-scale projects, but it requires a solid technical foundation to set up and maintain.

If you want the larger, more accurate models, you’ll need a machine with a decent GPU (Graphics Processing Unit), because those models are computationally hungry; the smaller models can run on an ordinary CPU, just more slowly. The setup process means getting comfortable with tools like Python and the command line. While it takes more work to get going, the payoff in control and long-term cost savings is a game-changer for the right kind of user.
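For the local route, the open-source package installs with `pip install -U openai-whisper` and also needs `ffmpeg` on your system. The project's README lists approximate VRAM needs per model size (roughly 1 GB for tiny and base, 2 GB for small, 5 GB for medium, and 10 GB for large), which the sketch below uses to pick the biggest model your GPU should hold. Treat those figures as ballpark and the helper names as hypothetical.

```python
from typing import Optional

# Approximate VRAM per model from the openai-whisper README, largest first
MODEL_VRAM_GB = [("large", 10), ("medium", 5), ("small", 2), ("base", 1), ("tiny", 1)]


def pick_model(free_vram_gb: float) -> Optional[str]:
    """Return the largest model that should fit in GPU memory, or None to suggest the CPU."""
    for name, needed in MODEL_VRAM_GB:
        if free_vram_gb >= needed:
            return name
    return None


def transcribe_locally(path: str, free_vram_gb: float) -> str:
    """Transcribe on this machine; after the one-time model download, audio stays local."""
    import whisper  # pip install -U openai-whisper (ffmpeg must be installed too)

    chosen = pick_model(free_vram_gb)
    device = "cuda" if chosen else "cpu"
    model = whisper.load_model(chosen or "base", device=device)  # small model on CPU
    return model.transcribe(path)["text"]
```

If you'd rather skip Python entirely, the same package provides a command-line tool, for example `whisper interview.mp3 --model medium`.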

For a more hands-on walkthrough, our guide on how to convert audio files to text lays out the practical steps to get your transcription workflow up and running.

Whisper AI Pricing and Data Privacy: What You Need to Know

Before you jump into any new tool, two questions always bubble to the surface: what’s the damage to my wallet, and is my data going to be secure? With Whisper AI, the answers to both really depend on which path you take: the easy-to-use API or the self-hosted open-source model.

If you go with the official OpenAI API, you’re looking at a straightforward pay-as-you-go system. You’re billed for every minute of audio you process, which makes costs predictable. Need to transcribe a 60-minute podcast? You'll know exactly what you’re paying based on that per-minute rate.

This model is ideal for anyone who wants to get up and running immediately without fussing with servers or installations. There are no monthly fees or upfront commitments, so it’s a really flexible choice for projects big and small.
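The per-minute arithmetic is simple enough to script. The sketch below assumes $0.006 per minute, the price OpenAI announced for the `whisper-1` API in March 2023; rates change, so check OpenAI's current pricing page before budgeting. Python's `Decimal` keeps the result free of floating-point rounding noise.

```python
from decimal import Decimal

# USD per minute: the whisper-1 launch price, used here as an assumption
ASSUMED_RATE_PER_MINUTE = Decimal("0.006")


def estimate_cost(duration_seconds: int, rate_per_minute: Decimal = ASSUMED_RATE_PER_MINUTE) -> Decimal:
    """Estimate the API cost of transcribing a recording of the given length."""
    minutes = Decimal(duration_seconds) / Decimal(60)
    return minutes * rate_per_minute


if __name__ == "__main__":
    print(estimate_cost(60 * 60))  # a 60-minute podcast: 0.360
```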

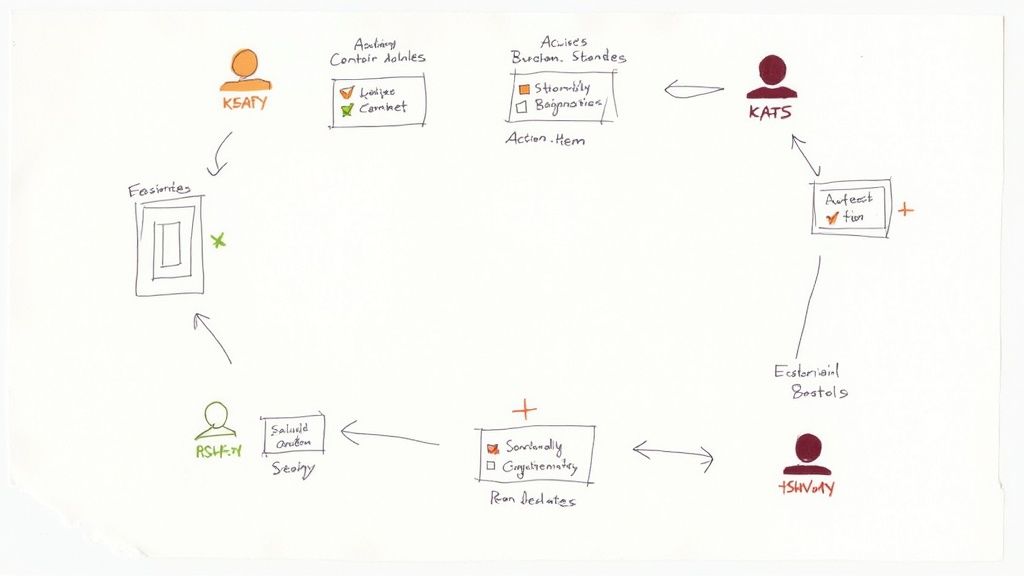

How Your Data is Handled

For a lot of people, data privacy isn't just a checkbox; it's a dealbreaker. When you upload an audio file to the OpenAI API, it travels to OpenAI's servers to be transcribed. OpenAI's stated policy is that data sent through the API is not used to train its models unless you explicitly opt in, which is a huge plus.

That said, OpenAI may retain your files for a limited period to monitor for misuse before deleting them; check its current data-usage policy for the exact retention window. This is pretty standard for cloud services, but it’s something you absolutely need to be aware of, especially if you’re working with sensitive information.

Choosing between the API and self-hosting is a direct trade-off. You can prioritize either the plug-and-play convenience of the API or the absolute data sovereignty of a local open-source setup.

If your work demands iron-clad privacy—where audio files can't ever leave your own network—then running the open-source Whisper AI models is your only real option. Self-hosting puts you in complete control because every bit of processing happens on your own hardware. This setup guarantees no third party ever touches your data, giving you the ultimate peace of mind. While you’ll skip the per-minute fees, you will have to invest in the right hardware and have the technical know-how to get it all set up.

Common Questions About Whisper AI

As you start to get a feel for what Whisper AI can do, a few key questions usually pop up. Getting these sorted out will give you a much clearer picture of where the technology really shines and what its current limits are.

Let's dig into some of the most common queries, from how it stacks up against the competition to whether it can tell who's talking.

How Does Whisper Compare to Other Transcription Services?

The big differentiator for Whisper is its raw accuracy. It was trained on an enormous and incredibly diverse dataset, which is why it's so good at understanding different accents, languages, and even audio with background noise.

You might find that services like Google Speech-to-Text have more bells and whistles for enterprise needs, like real-time streaming. But when it comes to the core task of getting the words right, especially with messy, real-world audio, Whisper often comes out on top right out of the box.

Can Whisper AI Identify Different Speakers?

In short, no. Whisper AI isn't built for speaker diarization—the technical term for figuring out who is speaking and when. Its sole focus is transcribing what was said with the highest possible accuracy.

If you need to know who said what, you'll have to use another tool. The typical workflow is to run your audio through Whisper to get a timestamped transcript, run the same audio through a separate diarization model to find out when each speaker is talking, and then match the two up by their timestamps.
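The matching step usually works on time overlap: each Whisper segment gets the speaker whose turn overlaps it the most. Here is a minimal sketch, assuming speaker turns arrive as `(start, end, label)` tuples, a shape you could convert the output of a diarization library such as pyannote.audio into. The function names and sample data are hypothetical.

```python
from typing import Dict, List, Tuple, Union

Segment = Dict[str, Union[float, str]]
Turn = Tuple[float, float, str]  # (start_seconds, end_seconds, speaker_label)


def overlap(a_start: float, a_end: float, b_start: float, b_end: float) -> float:
    """Length in seconds of the intersection of two time intervals (0.0 if disjoint)."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def label_speakers(segments: List[Segment], turns: List[Turn]) -> List[Tuple[str, str]]:
    """Pair each transcript segment with the speaker whose turn overlaps it most."""
    labelled = []
    for seg in segments:
        start, end = float(seg["start"]), float(seg["end"])
        best_label, best_overlap = "UNKNOWN", 0.0
        for t_start, t_end, label in turns:
            shared = overlap(start, end, t_start, t_end)
            if shared > best_overlap:
                best_label, best_overlap = label, shared
        labelled.append((best_label, str(seg["text"]).strip()))
    return labelled
```

Segments that no speaker turn touches come back labelled "UNKNOWN", which is a useful signal that the two tools disagree about where speech occurred.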

What Are the Main Limitations?

Whisper is impressive, but it’s not a magic bullet. Here are a few things to keep in mind:

- No Live Transcription: It processes audio in 30-second windows, so out of the box it's not designed for real-time (live) use cases like captioning a live stream.

- No Speaker Labels: As we just covered, it can't tell you who is speaking without help from another tool.

- Potential "Hallucinations": Sometimes, during long pauses or when it encounters pure noise, the model can invent text that isn't there. It's an occasional quirk to watch out for.

- It's Power-Hungry: To get the best results, you need to run the largest models, and those require some serious computing power. Running them effectively on a standard laptop can be a real challenge.
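For the hallucination problem, the open-source package gives you some signals to work with. Each segment returned by `transcribe()` includes `no_speech_prob`, `avg_logprob`, and `compression_ratio`, and `transcribe()` itself uses default thresholds of 0.6, -1.0, and 2.4 on those values when deciding whether to treat a window as silence or to retry decoding. The sketch below reuses the same numbers to flag segments worth a manual listen; it is a heuristic screen under those assumptions, not a guarantee, and the function name is hypothetical. Passing `condition_on_previous_text=False` to `transcribe()` is another commonly suggested way to make the model less prone to repetition loops.

```python
from typing import Dict, List, Union

Segment = Dict[str, Union[float, str]]

# The same numbers openai-whisper's transcribe() uses as its default thresholds
NO_SPEECH_THRESHOLD = 0.6
LOGPROB_THRESHOLD = -1.0
COMPRESSION_RATIO_THRESHOLD = 2.4


def suspicious_segments(segments: List[Segment]) -> List[Segment]:
    """Return segments that may contain invented text and deserve a manual check."""
    flagged = []
    for seg in segments:
        probably_silence = float(seg["no_speech_prob"]) > NO_SPEECH_THRESHOLD
        low_confidence = float(seg["avg_logprob"]) < LOGPROB_THRESHOLD
        repetitive = float(seg["compression_ratio"]) > COMPRESSION_RATIO_THRESHOLD
        if probably_silence or low_confidence or repetitive:
            flagged.append(seg)
    return flagged
```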
